Apache Kafka is a popular distributed message broker designed to handle large volumes of real-time data. A Kafka cluster is highly scalable and fault-tolerant.

It is also commonly cited as delivering higher throughput than traditional message brokers such as ActiveMQ and RabbitMQ.

Though it is generally used as a publish/subscribe messaging system, a lot of organizations also use it for log aggregation because it offers persistent storage for published messages.

Here at LinuxAPT, as part of our Server Management Services, we regularly help our customers with Apache-related queries.

In this context, we shall look into how to install and configure Kafka on Ubuntu 20.04.

How to install Apache Kafka on an Ubuntu system?

To begin this installation, log in to your server as a user with sudo rights.

Apache Kafka requires Java to be installed on your Ubuntu 20.04 machine.
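Before installing anything, it can be useful to check whether a Java runtime is already present. The sketch below is only an illustration: java_major is a hypothetical helper (not part of Java or Kafka) that reduces a version string such as 11.0.9.1, or the older 1.8.0_275 style, to its major number.

```shell
#!/bin/sh
# Hypothetical helper: reduce a Java version string to its major number.
java_major() {
    v=$1
    # Legacy strings such as 1.8.0_275 carry the major version after "1."
    case $v in
        1.*) v=${v#1.} ;;
    esac
    # Keep everything before the first '.', '_' or '-'
    printf '%s\n' "${v%%[._-]*}"
}

if command -v java >/dev/null 2>&1; then
    # java -version writes to stderr, e.g.: openjdk version "11.0.9.1" ...
    raw=$(java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
    echo "Java major version found: $(java_major "$raw")"
else
    echo "Java not found; install it with the commands that follow."
fi
```

If the reported major version is lower than the one you intend to use, installing the OpenJDK 11 package as described in this section is the simpler path.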

Start by updating the package index with the following command:

$ sudo apt update

After the package index is updated, install Java:

$ sudo apt install openjdk-11-jre-headless

Then verify that Java is successfully installed by running:

$ java --version

How to download Kafka on Ubuntu?

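Now, download the Kafka source archive to your Ubuntu 20.04 machine. It is highly recommended to download it from the official Apache Kafka website: https://kafka.apache.org/downloads

Note that the -src archive is the source release: the scripts in its bin/ directory expect compiled classes, so it has to be built before the services can start. The prebuilt binary archive (named like kafka_2.13-<version>.tgz) avoids that step, but its extracted directory name differs from the paths used below.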

At the time of writing this article, the latest version is 2.7.0.
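Because the version number appears in both the download URL and the archive name, it can help to keep it in one place. The following is a minimal sketch under that assumption; KAFKA_VERSION and kafka_src_url are illustrative names introduced here, and the URL pattern is simply the one used in this article.

```shell
#!/bin/sh
# KAFKA_VERSION is an illustrative variable name; adjust it to the release you want.
KAFKA_VERSION=${KAFKA_VERSION:-2.7.0}

# Hypothetical helper: print the source-archive URL for a given version,
# following the URL pattern used in this article.
kafka_src_url() {
    printf 'https://downloads.apache.org/kafka/%s/kafka-%s-src.tgz\n' "$1" "$1"
}

echo "Archive: kafka-${KAFKA_VERSION}-src.tgz"
echo "URL:     $(kafka_src_url "$KAFKA_VERSION")"
```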

You can download it with the following commands:

$ cd $HOME

$ wget https://downloads.apache.org/kafka/2.7.0/kafka-2.7.0-src.tgz

Next, create a new folder named kafka-server in the /usr/local directory:

$ sudo mkdir /usr/local/kafka-server

Then extract the downloaded archive to the /usr/local/kafka-server directory:

$ sudo tar xf $HOME/kafka-2.7.0-src.tgz -C /usr/local/kafka-server

Now that the archive is extracted, you can list the Kafka scripts by running:

$ ls /usr/local/kafka-server/kafka-2.7.0-src/bin/

Now it's time to make Kafka and Zookeeper run as daemons on Ubuntu 20.04.

To do this, you have to create systemd unit files for both Kafka and Zookeeper.

How to create systemd unit files for Kafka and Zookeeper?

Start by using your favorite editor to create two files as follows:

/etc/systemd/system/zookeeper.service

[Unit]

Description=Apache Zookeeper Server

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

ExecStart=/usr/local/kafka-server/kafka-2.7.0-src/bin/zookeeper-server-start.sh /usr/local/kafka-server/kafka-2.7.0-src/config/zookeeper.properties

ExecStop=/usr/local/kafka-server/kafka-2.7.0-src/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

/etc/systemd/system/kafka.service

[Unit]

Description=Apache Kafka Server

Documentation=http://kafka.apache.org/documentation.html

Requires=zookeeper.service

After=zookeeper.service

[Service]

Type=simple

Environment="JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64"

ExecStart=/usr/local/kafka-server/kafka-2.7.0-src/bin/kafka-server-start.sh /usr/local/kafka-server/kafka-2.7.0-src/config/server.properties

ExecStop=/usr/local/kafka-server/kafka-2.7.0-src/bin/kafka-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

To apply the changes, reload the systemd daemon and enable the services:

$ sudo systemctl daemon-reload

$ sudo systemctl enable --now zookeeper.service

$ sudo systemctl enable --now kafka.service
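If either service fails to start, one common cause is an ExecStart or ExecStop path that does not match the directory where Kafka was extracted. The sketch below is one way to check this; check_unit_paths is a hypothetical helper written for this article, not a systemd tool, and it only inspects the first word after ExecStart= and ExecStop=.

```shell
#!/bin/sh
# Hypothetical helper: confirm that the scripts named in a unit file exist
# and are executable. Returns non-zero if any of them is missing.
check_unit_paths() {
    unit_file=$1
    rc=0
    # Extract the script path (first word) from ExecStart= and ExecStop= lines.
    for script in $(sed -n \
            -e 's/^ExecStart=\([^ ]*\).*/\1/p' \
            -e 's/^ExecStop=\([^ ]*\).*/\1/p' "$unit_file"); do
        if [ -x "$script" ]; then
            echo "ok: $script"
        else
            echo "missing or not executable: $script"
            rc=1
        fi
    done
    return "$rc"
}

# Check the two unit files from this article if they are present.
for unit in /etc/systemd/system/zookeeper.service /etc/systemd/system/kafka.service; do
    if [ -f "$unit" ]; then
        check_unit_paths "$unit" || echo "Review the paths in $unit"
    fi
done
```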

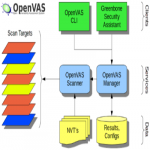

Then check that both services are running:

$ sudo systemctl status kafka zookeeper

How to install Cluster Manager for Apache Kafka (CMAK) on Ubuntu?

Here, we will install CMAK, which stands for Cluster Manager for Apache Kafka. CMAK is an open-source tool for managing and monitoring Kafka services.

It was originally developed by Yahoo. To get CMAK, clone its repository:

$ cd $HOME

$ git clone https://github.com/yahoo/CMAK.git

How to configure CMAK on Ubuntu?

With your favorite editor, you can modify the CMAK configuration:

$ vim ~/CMAK/conf/application.conf

Here, Zookeeper runs on the same host, so change the value of cmak.zkhosts to localhost:2181.

You can find cmak.zkhosts at around line 28; the exact line may vary between CMAK versions.
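The same change can be scripted instead of made in an editor. The following is a rough sketch that assumes the file contains a quoted assignment of the form cmak.zkhosts="..."; set_zkhosts is a hypothetical helper, and the exact contents of application.conf may differ in your checkout, so review the result afterwards.

```shell
#!/bin/sh
# Hypothetical helper: set cmak.zkhosts in a CMAK application.conf.
# Only the quoted assignment is rewritten; any unquoted override line
# (for example one that reads an environment variable) is left untouched.
# A backup copy is kept next to the file with a .bak suffix.
set_zkhosts() {
    conf=$1
    hosts=$2
    sed -i.bak "s|^cmak\.zkhosts=\".*\"|cmak.zkhosts=\"$hosts\"|" "$conf"
}

CONF="$HOME/CMAK/conf/application.conf"
if [ -f "$CONF" ]; then
    set_zkhosts "$CONF" "localhost:2181"
    # Show the resulting lines with their line numbers.
    grep -n '^cmak\.zkhosts' "$CONF"
else
    echo "No application.conf found at $CONF"
fi
```

The host string is inserted into the sed expression as-is, so this sketch is only suitable for simple host:port values that contain no | or & characters.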

Now, build a zip file for deploying the application:

$ cd ~/CMAK

$ ./sbt clean dist

This can take a minute or more to complete.
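The name of the generated archive includes the CMAK version, so it may not be cmak-3.0.0.5.zip if you cloned a newer revision. As a small sketch, the hypothetical helper find_cmak_zip below looks for the archive in the build output directory rather than assuming a version.

```shell
#!/bin/sh
# Hypothetical helper: print the first cmak-*.zip found in a directory.
# Returns non-zero if no archive is present.
find_cmak_zip() {
    dir=$1
    # If the glob matches nothing it stays literal, and the -f test fails.
    for candidate in "$dir"/cmak-*.zip; do
        if [ -f "$candidate" ]; then
            printf '%s\n' "$candidate"
            return 0
        fi
    done
    return 1
}

if zip_path=$(find_cmak_zip "$HOME/CMAK/target/universal"); then
    echo "Built archive: $zip_path"
else
    echo "No cmak-*.zip found yet; has ./sbt clean dist finished?"
fi
```

If the reported file name differs from the one used in the next steps, substitute it in the unzip and cd commands.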

How to start the CMAK service on Ubuntu?

i. Change into the ~/CMAK/target/universal directory and extract the zip file:

$ cd ~/CMAK/target/universal

$ unzip cmak-3.0.0.5.zip

ii. After unzipping the cmak-3.0.0.5.zip file, change into the extracted directory and execute the cmak binary:

$ cd cmak-3.0.0.5

$ bin/cmak

By default, the cmak service will run on port 9000.

Open a web browser and go to http://<ip-server>:9000

When you first access its interface, there will be no available cluster.

iii. Add a new one by clicking Add Cluster in the Cluster drop-down list.

iv. Fill in the form with the requested information: Cluster Name, Cluster Zookeeper Hosts, Kafka Version and so on.

v. Leave the other options at their default values, then click Save.

The cluster should now be created.

You can now create a sample topic.

vi. Assume that we're going to create a topic named "LinuxAPTTopic".

Leave CMAK running, open a new terminal, and run the following commands:

$ cd /usr/local/kafka-server/kafka-2.7.0-src

$ bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 1 --partitions 1 --topic LinuxAPTTopic

This command will create the topic "LinuxAPTTopic".

vii. Finally, go to the cluster view, then click Topic > List.
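The topic can also be confirmed from the terminal. The sketch below wraps the same kafka-topics.sh script with its --list option; KAFKA_HOME and list_topics are names introduced here for illustration, and the default path assumes the extraction directory used earlier in this article.

```shell
#!/bin/sh
# KAFKA_HOME is an assumed variable pointing at the extracted Kafka directory.
KAFKA_HOME=${KAFKA_HOME:-/usr/local/kafka-server/kafka-2.7.0-src}

# Hypothetical wrapper: list topics, or fail with a message if the script is absent.
list_topics() {
    tool="$KAFKA_HOME/bin/kafka-topics.sh"
    if [ ! -x "$tool" ]; then
        echo "kafka-topics.sh not found under $KAFKA_HOME" >&2
        return 1
    fi
    "$tool" --list --zookeeper localhost:2181
}

# Look for an exact match of the topic name in the listing.
if list_topics | grep -qx 'LinuxAPTTopic'; then
    echo "LinuxAPTTopic exists"
else
    echo "LinuxAPTTopic not listed"
fi
```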


Conclusion

This article covers how to install and configure Apache Kafka on your Ubuntu 20.04 LTS machine. Apache Kafka is a distributed event streaming platform capable of handling high-performance data pipelines. It was originally developed at LinkedIn, was later released as an open-source platform, and is now used by many IT companies around the world.

Terms related to Apache Kafka Infrastructure:

1. Topic: A topic is a named category used to store and publish a particular stream of data. For example, if you wish to store all the data about a page being clicked, you can give the topic a name such as "Added Customer".

2. Partition: Every topic is split up into partitions ("baskets"). When a topic is created, the number of partitions needs to be specified, but it can be increased later as the need arises. Each message is stored in a partition with an incremental id known as its offset.

3. Kafka Broker: Every server with Kafka installed on it is known as a broker. It holds several topics along with their partitions.

4. Zookeeper: Zookeeper manages Kafka's cluster state and configurations.
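When choosing real topic names, keep in mind that Kafka restricts them to ASCII letters, digits, '.', '_' and '-', with a maximum length of 249 characters, and does not allow "." or ".." as a name. A descriptive label containing a space, such as "Added Customer", would therefore need to be written as something like Added-Customer. The sketch below checks a name against these rules; valid_topic_name is a hypothetical helper, not a Kafka command.

```shell
#!/bin/sh
# Hypothetical helper: return 0 if the name satisfies Kafka's topic naming rules.
valid_topic_name() {
    name=$1
    # Must be non-empty and at most 249 characters long.
    [ -n "$name" ] || return 1
    [ "${#name}" -le 249 ] || return 1
    case $name in
        # "." and ".." are not allowed as topic names.
        .|..) return 1 ;;
        # Reject any character outside letters, digits, '.', '_' and '-'.
        *[!A-Za-z0-9._-]*) return 1 ;;
    esac
    return 0
}

for candidate in "LinuxAPTTopic" "Added Customer" "Added-Customer"; do
    if valid_topic_name "$candidate"; then
        echo "valid:   $candidate"
    else
        echo "invalid: $candidate"
    fi
done
```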

Main advantages of using Apache Kafka:

1. Message Broking: In comparison to most messaging systems, Kafka has better throughput, built-in partitioning, replication, and fault tolerance, which makes it a good solution for large-scale message processing applications.

2. Website Activity Tracking

3. Log Aggregation: Kafka abstracts away the details of files and gives a cleaner abstraction of log or event data as a stream of messages.

4. Stream Processing: Capturing data in real time from event sources, storing these event streams durably for later retrieval, and routing the event streams to different destination technologies as needed.

5. Event Sourcing: This is a style of application design where state changes are logged as a time-ordered sequence of records.

6. Commit Log: Kafka can serve as a kind of external commit-log for a distributed system. The log helps replicate data between nodes and acts as a re-syncing mechanism for failed nodes to restore their data.

7. Metrics: This involves aggregating statistics from distributed applications to produce centralized feeds of operational data.

To install Apache Kafka on Ubuntu:

1. Update your fresh Ubuntu 20.04 server and get Java installed as illustrated below.

$ sudo apt update && sudo apt upgrade

$ sudo apt install default-jre wget git unzip -y

$ sudo apt install default-jdk -y

2. Fetch Kafka on Ubuntu 20.04.

$ cd ~

$ wget https://downloads.apache.org/kafka/2.6.0/kafka_2.13-2.6.0.tgz

$ sudo mkdir /usr/local/kafka-server && cd /usr/local/kafka-server

$ sudo tar -xvzf ~/kafka_2.13-2.6.0.tgz --strip 1

3. Create Kafka and Zookeeper Systemd Unit Files

i. Let us begin with Zookeeper service.

$ sudo vim /etc/systemd/system/zookeeper.service

[Unit]

Description=Apache Zookeeper Server

Requires=network.target remote-fs.target

After=network.target remote-fs.target

[Service]

Type=simple

ExecStart=/usr/local/kafka-server/bin/zookeeper-server-start.sh /usr/local/kafka-server/config/zookeeper.properties

ExecStop=/usr/local/kafka-server/bin/zookeeper-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

ii. Then for the Kafka service. Make sure JAVA_HOME points to your actual Java installation, or Kafka will not start.

$ sudo vim /etc/systemd/system/kafka.service

[Unit]

Description=Apache Kafka Server

Documentation=http://kafka.apache.org/documentation.html

Requires=zookeeper.service

After=zookeeper.service

[Service]

Type=simple

Environment="JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64"

ExecStart=/usr/local/kafka-server/bin/kafka-server-start.sh /usr/local/kafka-server/config/server.properties

ExecStop=/usr/local/kafka-server/bin/kafka-server-stop.sh

Restart=on-abnormal

[Install]

WantedBy=multi-user.target

iii. Reload the systemd daemon to apply changes and then start the services.

$ sudo systemctl daemon-reload

$ sudo systemctl enable --now zookeeper

$ sudo systemctl enable --now kafka

$ sudo systemctl status kafka zookeeper

4. Install Cluster Manager for Apache Kafka (CMAK) | Kafka Manager.

$ cd ~

$ git clone https://github.com/yahoo/CMAK.git

5. Configure CMAK on Ubuntu.
