Bulk data import performance matters because, when migrating large databases, the data load is often where performance problems show up.

Here at Ibmi Media, as part of our Server Management Services, we regularly help our customers optimize and improve the performance of their servers.

In this context, we shall look into how to improve bulk data import performance.

How to improve bulk data import performance in SQL Server?

Recently, a customer asked us to import a large volume of data, and the import was taking a long time to complete. So we set out to reduce the import time.

Here are the database-side settings we configured to reduce the import time and improve performance.

1. Increase AutoGrow settings to improve performance during bulk data import

We can use the Autogrow settings to automate SQL Server database file growth. Even though this is a useful property, it can degrade the performance of bulk imports and other data load operations.

So we increase the Autogrow setting to a large value to avoid these performance penalties.

If the value is left at the default or set to a small value, the database engine has to perform an Autogrow operation on the data file every time the file fills up during the bulk import or data load, until the file reaches its maximum size.

As a result, these repeated Autogrow operations consume extra resources such as I/O and CPU, and add time to the data load transaction.
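As a sketch, the growth increment (and, optionally, a larger pre-allocated size) can be set with `ALTER DATABASE ... MODIFY FILE`. The database name `SalesDB`, the logical file name `SalesDB_Data`, and the sizes are assumptions for illustration; look up the real logical file names in `sys.database_files` first.

```sql
USE [SalesDB];  -- hypothetical database name

-- Logical file names, current size and growth
-- (size is in 8 KB pages; growth is in 8 KB pages unless is_percent_growth = 1)
SELECT name, physical_name, size, growth, is_percent_growth
FROM sys.database_files;

-- Grow the data file in 1 GB steps instead of small increments
-- (SalesDB_Data is an assumed logical file name)
ALTER DATABASE [SalesDB]
MODIFY FILE (NAME = N'SalesDB_Data', FILEGROWTH = 1024MB);

-- Optionally pre-size the file for the expected data volume;
-- SIZE must be larger than the file's current size
ALTER DATABASE [SalesDB]
MODIFY FILE (NAME = N'SalesDB_Data', SIZE = 50GB);
```

Pre-sizing the file means most of the load needs no growth events at all, while the larger FILEGROWTH value acts as a safety net if the estimate is too small.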

2. Enable Instant File Initialization to improve bulk data import performance

We make sure Instant File Initialization (IFI) is enabled for the SQL Server instance to reduce the time SQL Server takes to allocate space when creating or growing database files.

IFI also speeds up the AutoGrow process. With a large AutoGrow value, each growth event would otherwise take a while, because SQL Server fills the newly allocated disk space with zeros before using it. IFI skips this zeroing for data files, so even very large data files can be created or grown much more quickly.
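IFI is enabled by granting the SQL Server service account the Windows "Perform volume maintenance tasks" privilege (SE_MANAGE_VOLUME_NAME), for example through Local Security Policy, and then restarting the SQL Server service. On SQL Server 2016 SP1 and later, a query along these lines reports whether it is active:

```sql
-- 'Y' in instant_file_initialization_enabled means IFI is active for that service
SELECT servicename, service_account, instant_file_initialization_enabled
FROM sys.dm_server_services;
```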

3. Auto Shrink

AutoShrink is a very expensive operation in terms of I/O, CPU usage, locking, and transaction log generation, so it should always be OFF, even during normal daily operations.

We turn it OFF right away and make sure the disk has enough free space to accommodate the bulk operation or data load. Repeatedly shrinking and growing data files can cause file-system-level fragmentation, which slows down performance, and it also consumes a lot of CPU and I/O.

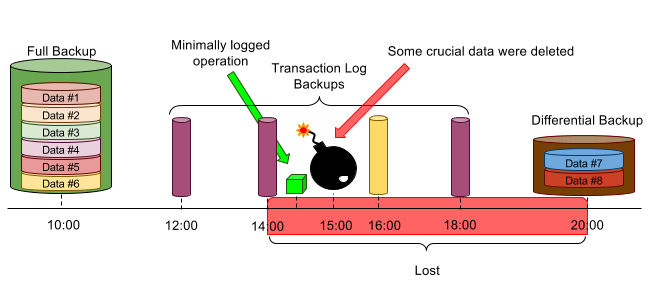
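A minimal sketch of turning AutoShrink off and checking the free space on the volumes that hold the database files, assuming a database named `SalesDB` (a hypothetical name):

```sql
-- Turn AUTO_SHRINK off (SalesDB is a hypothetical database name)
ALTER DATABASE [SalesDB] SET AUTO_SHRINK OFF;

-- Confirm: 0 means auto shrink is off
SELECT name, is_auto_shrink_on
FROM sys.databases
WHERE name = N'SalesDB';

-- Free space on each volume that holds one of the database's files
SELECT DISTINCT vs.volume_mount_point, vs.available_bytes
FROM sys.master_files AS mf
CROSS APPLY sys.dm_os_volume_stats(mf.database_id, mf.file_id) AS vs
WHERE mf.database_id = DB_ID(N'SalesDB');
```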

4. Reduce Logging

To reduce logging, we change the recovery model to SIMPLE or BULK_LOGGED. Under the simple recovery model, most bulk operations are minimally logged. If a database uses the full recovery model, every row inserted during a bulk import is fully logged in the transaction log.

For large data imports, this can cause the transaction log to fill up quickly.

So for bulk import operations, minimal logging is better than full logging, because it reduces the chance of running out of log space during the import. To minimally log a bulk import on a database that normally uses the full recovery model, we first switch the database to the bulk-logged recovery model. After the data is imported, we switch it back to the full recovery model.
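The switch can be sketched as below. The database, table, and file paths are hypothetical. Switching from FULL to BULK_LOGGED and back keeps the log backup chain intact, but a log backup that contains minimally logged operations cannot be used for a point-in-time restore within that backup; taking a log backup right after switching back restores point-in-time recovery for later changes. TABLOCK is one of the conditions SQL Server requires for minimal logging.

```sql
-- Check the current recovery model first (expected: FULL)
SELECT name, recovery_model_desc
FROM sys.databases
WHERE name = N'SalesDB';

ALTER DATABASE [SalesDB] SET RECOVERY BULK_LOGGED;

-- Bulk import (table and file path are hypothetical)
BULK INSERT SalesDB.dbo.Users
FROM 'C:\import\users.csv'
WITH (FIELDTERMINATOR = ',', ROWTERMINATOR = '\n', TABLOCK);

ALTER DATABASE [SalesDB] SET RECOVERY FULL;

-- Log backup so that point-in-time recovery is available again from here on
BACKUP LOG [SalesDB] TO DISK = N'D:\backup\SalesDB_after_import.trn';
```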

5. Disable Auto_Update_Statistics during bulk data import

Auto_Update_Statistics is a very useful property and should be ON during normal business operations. However, it is better to turn it off during a huge data load or bulk import, because updating statistics also consumes system resources.

This can cause performance issues during bulk data migration, so we turn it off for the duration of the load and enable it again once the data load or bulk inserts are complete.
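A sketch of toggling the option around the load, assuming a database `SalesDB` and a loaded table `dbo.Users` (both hypothetical). Refreshing the table's statistics once after the load is a suggestion here, so the optimizer does not work from statistics that predate the import:

```sql
USE [SalesDB];  -- hypothetical database name

ALTER DATABASE [SalesDB] SET AUTO_UPDATE_STATISTICS OFF;

-- ... run the bulk import here ...

ALTER DATABASE [SalesDB] SET AUTO_UPDATE_STATISTICS ON;

-- Refresh statistics on the loaded table once (dbo.Users is an assumed name)
UPDATE STATISTICS dbo.Users;
```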

6. Auto_Create_Statistics

This should also be OFF during the load, because creating statistics consumes system resources as well and can lower overall bulk import performance.

However, we make sure to enable it again after the data load or bulk insert operation.
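The same pattern applies here; a sketch for a hypothetical `SalesDB` database, ending with a check that both statistics options are back on:

```sql
ALTER DATABASE [SalesDB] SET AUTO_CREATE_STATISTICS OFF;

-- ... run the bulk import here ...

ALTER DATABASE [SalesDB] SET AUTO_CREATE_STATISTICS ON;

-- Both columns should be 1 again once the load is finished
SELECT name, is_auto_create_stats_on, is_auto_update_stats_on
FROM sys.databases
WHERE name = N'SalesDB';
```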

7. Parallelism – MAXDOP

Instead of leaving the max degree of parallelism (MAXDOP) at its default, we configure it explicitly and assign half of the CPU cores to parallel processing during the data load and bulk import operations. This can significantly reduce the total data load time. Once the load is finished, we revert the setting to its original value, in line with the application's best practices.
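One way to do this is the server-wide `sp_configure` option, sketched below. Note that it affects every database on the instance. The 8-core server (so a value of 4) is an assumption, and the final `0` is only a placeholder for whatever value was recorded before the change:

```sql
-- Number of logical CPUs visible to SQL Server
SELECT cpu_count FROM sys.dm_os_sys_info;

-- 'max degree of parallelism' is an advanced option
EXEC sp_configure 'show advanced options', 1;
RECONFIGURE;

-- Record the current value (run_value column) so it can be restored later
EXEC sp_configure 'max degree of parallelism';

-- Assumed 8-core server: half of the cores = 4
EXEC sp_configure 'max degree of parallelism', 4;
RECONFIGURE;

-- ... run the bulk import here ...

-- Restore the recorded value (0 is a placeholder)
EXEC sp_configure 'max degree of parallelism', 0;
RECONFIGURE;
```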

8. Using Batches to improve performance

We use an appropriately sized batch to improve bulk import performance, since importing a large data set as a single batch can be problematic. Both bcp and BULK INSERT can import data in a series of batches.

Each batch is imported and logged in a separate transaction, and once that transaction is committed, the rows it imported are committed. If the operation fails, only the rows imported by the current batch are rolled back, so we can resume importing from the beginning of the failed batch rather than from the beginning of the data file.

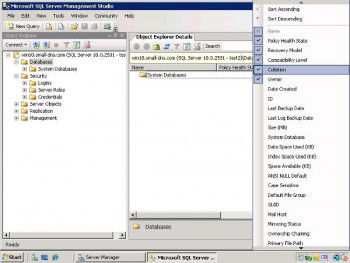
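A sketch using the BATCHSIZE option of BULK INSERT and the FIRSTROW option to resume after a failure. The table, file path, and batch size of 50,000 rows are assumptions; the arithmetic assumes the file has no header row.

```sql
-- Commit every 50,000 rows instead of loading the whole file in one transaction
-- (table name and file path are hypothetical)
BULK INSERT SalesDB.dbo.Users
FROM 'C:\import\users.csv'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR   = '\n',
    BATCHSIZE       = 50000
);

-- If batch 3 fails, batches 1 and 2 (rows 1-100,000) are already committed,
-- so the load can resume at row 100,001 (assuming no header row)
BULK INSERT SalesDB.dbo.Users
FROM 'C:\import\users.csv'
WITH (
    FIELDTERMINATOR = ',',
    ROWTERMINATOR   = '\n',
    BATCHSIZE       = 50000,
    FIRSTROW        = 100001
);

-- Equivalent bcp command line (run from a command prompt, not from T-SQL):
-- bcp SalesDB.dbo.Users in C:\import\users.csv -S localhost -T -c -t, -b 50000
```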

9. Dropping Indexes

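We can drop (or disable) indexes during the bulk load and recreate (or rebuild) them once it is complete, so SQL Server does not have to maintain those indexes while the data is loading. Only disable nonclustered indexes this way: disabling the clustered index makes the table inaccessible until it is rebuilt.

To disable and re-enable an index in SQL Server:

```sql
-- Disable the index before the bulk load
ALTER INDEX [IX_Users_UserID] ON SalesDB.dbo.Users DISABLE;

-- Rebuilding the index re-enables it after the load
ALTER INDEX [IX_Users_UserID] ON SalesDB.dbo.Users REBUILD;
```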
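When a table has several nonclustered indexes, they can be disabled in one pass. The sketch below assumes a table `dbo.Users` in a `SalesDB` database (hypothetical names); review the generated statements before running them. It re-enables everything afterwards with `ALTER INDEX ALL ... REBUILD`, while `ALTER INDEX ALL ... DISABLE` is deliberately avoided because it would also disable the clustered index.

```sql
USE [SalesDB];  -- hypothetical database name

-- Build one DISABLE statement per enabled nonclustered index on dbo.Users
DECLARE @sql NVARCHAR(MAX) = N'';

SELECT @sql = @sql + N'ALTER INDEX ' + QUOTENAME(i.name)
            + N' ON dbo.Users DISABLE;' + CHAR(10)
FROM sys.indexes AS i
WHERE i.object_id = OBJECT_ID(N'dbo.Users')
  AND i.type_desc = N'NONCLUSTERED'
  AND i.is_disabled = 0;

PRINT @sql;                 -- show the statements for review
EXEC sp_executesql @sql;    -- disable the nonclustered indexes

-- ... run the bulk import here ...

-- Rebuild (and thereby re-enable) every index on the table in one statement
ALTER INDEX ALL ON dbo.Users REBUILD;
```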

Conclusion

This article should help you improve bulk data import performance. For importing text files, the BULK INSERT command can be much faster than bcp or the DTS data pump; however, BULK INSERT cannot bulk copy data from SQL Server to a data file. When you need to export data from a SQL Server table to a text file, use the bcp utility instead of DTS.

To speed up a SQL bulk insert with transactions:

1. Declare the variables that store the number of desired items (@items), the size of the chunks (@chunk_size), and a counter of the inserts executed so far (@counter).

2. Open the first transaction and initialize the while loop.
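The two steps above can be sketched in T-SQL as follows. The steps after the loop is opened are not listed, so this sketch assumes the common pattern of committing every @chunk_size inserts and immediately opening the next transaction. The target table `dbo.BulkTarget` and the row counts are hypothetical.

```sql
SET NOCOUNT ON;  -- suppress per-row "1 row affected" messages

-- Hypothetical target table for the illustration
DROP TABLE IF EXISTS dbo.BulkTarget;
CREATE TABLE dbo.BulkTarget (
    id      INT          NOT NULL,
    payload VARCHAR(100) NOT NULL
);

-- Step 1: number of rows, rows per transaction, inserts executed so far
DECLARE @items      INT = 100000;
DECLARE @chunk_size INT = 10000;
DECLARE @counter    INT = 0;

-- Step 2: open the first transaction and start the loop
BEGIN TRANSACTION;
WHILE @counter < @items
BEGIN
    INSERT INTO dbo.BulkTarget (id, payload)
    VALUES (@counter, CONCAT('row ', @counter));

    SET @counter += 1;

    -- Commit every @chunk_size inserts and start a new transaction
    IF @counter % @chunk_size = 0
    BEGIN
        COMMIT TRANSACTION;
        BEGIN TRANSACTION;
    END
END
COMMIT TRANSACTION;  -- commit any remaining rows
```

Grouping inserts this way lets SQL Server flush the log once per chunk instead of once per autocommitted row; very large chunks, on the other hand, hold locks and log space for longer.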
