Are you facing the error, Broken Docker Swarm Cluster ? This guide will help you.

Here at Ibmi Media, as part of our Server Management Services, we regularly help our Customers to perform related Docker queries.

Main Causes of Broken Docker Swarm Cluster

The deployed application does not work due to networking issues.

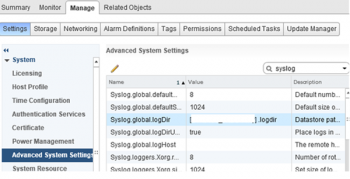

1. A lot of error messages in /var/log/syslog on several workers and nodes from dockerd:

~~

level=warning msg=”cannot find proper key indices while processing key update”

level=error msg=”agent: session failed” error=”rpc error: code = Aborted desc = dispatcher is stopped”

level=warning msg=”memberlist: Failed fallback ping: No installed keys could decrypt the message”

level=warning msg=”memberlist: Decrypt packet failed: No installed keys could decrypt the message

~~

2. netstat -tnlp does not show usual dockerd bindings on a leader manager.

3. After docker swarm leave, the manager will not see updated nodes.

4. Deployment is hung up.

5. The reboot of a worker or manager is not fixing the broken state of the cluster.

Prior to this issue, we have got quite a lot of errors with "dispatcher is stopped" from all nodes.

Usually, in case of "dispatcher is stopped" error, cluster usually re-initializes communication automatically and no administrator intervention is necessary.

Suppose there are three managers in docker swarm, which are periodically selecting a leader. However, this process failed and manager-0 became unreachable from other nodes.

Then, the other two manager nodes did not set new quorum because of the stopped dispatcher and the cluster did not change the status of manager-0.

Unfortunately, manager-0 was not able to renew the docker network and other managers and worker nodes have become unreachable.

How to fix Broken Docker Swarm Cluster ?

First and foremost, the solution is to destroy and reinitialize the whole cluster.

Then, Reinitialize docker swarm cluster.

To do this, follow the steps given below:

1. Optional if used, save tags assigned on nodes for later reassignment. On manager node, run this script:

~~

for node in $(docker node ls –filter role=worker –format ‘{{ .Hostname }}’);

do

tags=$(docker node inspect “$node” -f ‘{{.Spec.Labels}}’ |\

sed -e ‘s/^map\[//’ -e ‘s/\]$//’)

printf “%s: %s\n” “$node” “$tags”

done | sort

~~

Next, we can assign it later back with:

$ docker node update –label-add <TAG>=true <WORKER>

2. On each node, force to leave:

$ docker swarm leave –force

3. On each node, restart service:

$ systemctl restart docker.service

4. On manager, create a new cluster:

$ docker swarm init –availability drain –advertise-addr <INTERNAL IP>

Internal IP address is the intranet IP of cluster which will be used for communication between nodes.

5. Then, generate tokens for the manager/worker node invitation:

~~

$ docker swarm join-token manager

To add a manager to this swarm, run the following command:

docker swarm join –token SWMTKN-1-6bsyhhxe3txagx… 172.30.0.58:2377

$ docker swarm join-token worker

To add a worker to this swarm, run the following command:

docker swarm join –token SWMTKN-1-6bsyhhxe3txagy… 172.30.0.58:2377

~~

The previous command’s output are commands for joining the cluster. Copy-paste it to them.

6. On manager, confirm that nodes have joined the cluster:

$ docker node ls

Also set availability of manager nodes to Drain:

$ docker node update –availability drain <HOSTNAME>

Manager availability is by default Active, but if we do not want it to run any containers set it to Drain.

7. Optional for tags, add it to nodes now:

$ docker node update –label-add <TAG>=true <WORKER>

8. Finally, deploy the stack again:

$ docker stack deploy -c docker-compose.yml <STACK_NAME>

[Need urgent assistance in fixing Docker errors? – We're available 24*7. ]

Conclusion

This article covers methods to fix Broken Docker Swarm Cluster. To resolve this error, simply Reinitialize docker swarm cluster.

This article covers methods to fix Broken Docker Swarm Cluster. To resolve this error, simply Reinitialize docker swarm cluster.