This guide is for Nagios Log Server administrators and users who want to customize the filters applied to their Nagios Log Server inputs.

We can apply filters to messages before they are sent to Elasticsearch for indexing.

Here at Ibmi Media, as part of our Server Management Services, we regularly help our customers with Nagios-related queries.

In this context, we shall look into how to customize Nagios Log Server filters.

How to configure filters in Nagios Log Server?

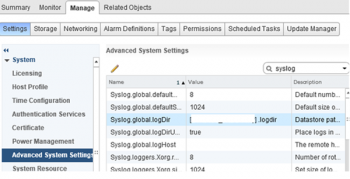

Nagios Log Server is a cluster-oriented application that uses Logstash to receive and process logs. The base configuration provided with a default installation of Nagios Log Server has all of the filters defined as part of the Global Config. The Global Config is an easy way to set up the same Logstash configuration on all our instances.

To access the configuration, navigate to Configure > Global (All Instances) > Global Config.

The two main tables on the Global Config page are Inputs and Filters; a third, hidden table is called Outputs. Logstash uses Inputs, Filters and Outputs together to receive incoming log data, process it and do something with it, which is normally to store it in the Elasticsearch database.
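The Inputs, Filters and Outputs flow can be pictured as a small pipeline. The following Python sketch is only a model, assuming each log entry is a dictionary of fields; the function names (`read_input`, `apply_filters`, `write_output`, `tag_length`) are hypothetical and do not correspond to Logstash internals, and a plain list stands in for the Elasticsearch index.

```python
from typing import Callable, Dict, Iterable, List

Event = Dict[str, str]
Filter = Callable[[Event], Event]


def read_input(lines: Iterable[str], event_type: str) -> List[Event]:
    """Input stage: wrap each raw line in an event with a 'message' field."""
    return [{"message": line.rstrip("\n"), "type": event_type} for line in lines]


def apply_filters(events: List[Event], filters: List[Filter]) -> List[Event]:
    """Filter stage: run every filter, in order, over every event."""
    processed = []
    for event in events:
        for f in filters:
            event = f(event)
        processed.append(event)
    return processed


def write_output(events: List[Event], store: List[Event]) -> None:
    """Output stage: a plain list stands in for the Elasticsearch index."""
    store.extend(events)


def tag_length(event: Event) -> Event:
    """Example filter: add a field holding the length of the message."""
    return {**event, "message_length": str(len(event["message"]))}


index: List[Event] = []
events = read_input(["first line\n", "second line\n"], event_type="testing")
write_output(apply_filters(events, [tag_length]), index)
print(index[0])
# {'message': 'first line', 'type': 'testing', 'message_length': '10'}
```

The point of the model is the ordering: every event passes through all filters before it reaches the output stage.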

Filters

The filters are in a structured format like this:

<filter_type> {
    <filter_action> => [ '<matching_field>', '<matching_pattern>' ]
}

Here, <filter_type> is the filter plugin that will be used for the <filter_action>. Logstash offers a large number of filter plugins.

Logstash uses the grok plugin to build filters from regular expressions and pattern matching.

The grok plugin is highly customizable, which makes it well suited to configuring custom filters in Nagios Log Server. In the structure above:

- <filter_action> is the action that will be taken using the filter type. Common grok actions are match, add_field and add_tag.

- <matching_field> will be the log field that is being processed. When Logstash receives logs from sources like syslog, the received data is categorized by certain fields (like the message field that contains some text about the log entry). We can choose things like hostname, severity, message and many more.

- <matching_pattern> is the pattern to look for in the <matching_field>; the data matched by the pattern(s) can be turned into new fields stored in the Elasticsearch database.
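To see how a match action uses these three pieces, here is a rough Python analogue, assuming an event is a dictionary of fields. `match_filter` is a hypothetical helper, and Python's `re` module stands in for grok (Python writes named groups as `(?P<name>...)`). One difference worth noting: real grok tags an event that fails to match with `_grokparsefailure`, whereas this sketch simply returns it unchanged.

```python
import re
from typing import Dict

Event = Dict[str, str]


def match_filter(event: Event, matching_field: str, matching_pattern: str) -> Event:
    """Rough analogue of: match => ['<matching_field>', '<matching_pattern>'].

    Looks up the field, searches it with the pattern and, on success, copies
    every named capture group into the event as a new field.
    """
    value = event.get(matching_field)
    if value is None:
        return event  # the field does not exist in this event
    m = re.search(matching_pattern, value)
    if m is None:
        return event  # no match: the event passes through unchanged
    return {**event, **m.groupdict()}


event = {"program": "demo", "message": "user=alice action=login"}
parsed = match_filter(event, "message", r"user=(?P<user>\w+) action=(?P<action>\w+)")
print(parsed["user"], parsed["action"])  # alice login
```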

In the following example, the message field is the <matching_field>. Here is an example of a line from an Apache error_log:

[Sun May 2 12:45:58 2021] [error] [client 192.168.1.9] File does not exist: /usr/local/nagiosxi/html/someURL

This message from the Apache error_log shows that the someURL file does not exist. It contains the time the notice was posted, the type ([error]), the client that tried to access the someURL file, the log message, and the path that generated the request error.

What matters is that the data in this line follows a structured format: every entry received from this input has the same structure. We identify this structure with the <matching_pattern>, which breaks the string of text into fields.

To filter the whole message or parts of it, we use a grok pattern that mirrors the structure of the message.

The filter shown below will be applied to any logs with the apache_error program name.

This is one of the two default filters that are present inside Nagios Log Server:

if [program] == 'apache_error' {
    grok {
        match => ['message', '\[(?<timestamp>%{DAY:day} %{MONTH:month} %{MONTHDAY} %{TIME} %{YEAR})\] \[%{WORD:class}\] \[%{WORD:originator} %{IP:clientip}\] %{GREEDYDATA:errmsg}']
    }
    mutate {
        replace => ['type', 'apache_error']
    }
}

Match

The line starts off like this:

match => ['message', '

'message' is the <matching_field>, the field that contains the data we want to break into fields, and it is enclosed in single quotes. The square bracket [ begins the match definition (the filter structure), and a single quote then starts the <matching_pattern>.

Time stamp

The first part of the <matching_pattern> is to handle the date/time stamp.

Here is the example from the log file:

[Sun May 2 12:45:58 2021]

Here is the pattern:

\[(?<timestamp>%{DAY:day} %{MONTH:month} %{MONTHDAY} %{TIME} %{YEAR})\]

The words in CAPITALS are the patterns to be matched; for example, %{MONTHDAY} corresponds to 2 in the string. These named patterns are how Logstash makes regular expressions easy to use.

If we look at %{MONTH:month}, the :month part takes the value matched by %{MONTH} and turns it into a field that is stored in the Elasticsearch database. This “:<field_name>” syntax is how we create new fields in the Elasticsearch database.
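The `%{PATTERN:field}` syntax can be imitated by expanding each token into a regular-expression group: a token with a field name becomes a named group, and one without a field name becomes a non-capturing group. This Python sketch assumes a tiny, simplified pattern table (the definitions shipped with Logstash are longer and stricter), and `expand` is a hypothetical helper, not part of any Logstash API.

```python
import re

# Simplified stand-ins for a few grok patterns (assumption: the real
# definitions shipped with Logstash are longer and stricter).
PATTERNS = {
    "DAY": r"(?:Mon|Tue|Wed|Thu|Fri|Sat|Sun)",
    "MONTH": r"(?:Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec)",
    "MONTHDAY": r"(?:0[1-9]|[12][0-9]|3[01]|[1-9])",
    "TIME": r"(?:[01]?[0-9]|2[0-3]):[0-5][0-9]:[0-5][0-9]",
    "YEAR": r"\d{4}",
}

# Matches %{NAME} and %{NAME:field}; group 2 is None when no field is given.
TOKEN = re.compile(r"%\{(\w+)(?::(\w+))?\}")


def expand(grok: str) -> str:
    """Hypothetical helper: rewrite grok tokens as Python regex groups."""
    def repl(m: re.Match) -> str:
        body = PATTERNS[m.group(1)]
        field = m.group(2)
        # %{NAME:field} -> named group (creates a field)
        # %{NAME}       -> non-capturing group (matches, but stores nothing)
        return f"(?P<{field}>{body})" if field else f"(?:{body})"
    return TOKEN.sub(repl, grok)


regex = expand("%{DAY:day} %{MONTH:month} %{MONTHDAY} %{TIME} %{YEAR}")
fields = re.match(regex, "Sun May 2 12:45:58 2021").groupdict()
print(fields)  # {'day': 'Sun', 'month': 'May'}
```

Only day and month come back as fields, because %{MONTHDAY}, %{TIME} and %{YEAR} carry no field name.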

(?<timestamp>

Sometimes Logstash does not have the pattern we need. In that case, we can use the Oniguruma syntax for named capture, which lets us match a piece of text and save it as a field:

(?<field_name>the pattern here)

The ?<timestamp> stores the text matched by the whole enclosed pattern in a field called timestamp, which is saved in the Elasticsearch database. Round brackets () surround the whole pattern. The escaped square brackets (\[ and \]) are very important.

A backslash \ precedes each square bracket because the backslash tells the grok pattern to treat the square bracket as plain text. Otherwise, the regular expression engine reads [ as the start of a character class, so the pattern either matches the wrong text or fails to compile and produces an invalid configuration. This practice of using a backslash is called “escaping”.
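The effect of escaping is easy to see in any regular expression engine. This Python sketch uses the standard `re` module (not the Oniguruma engine Logstash uses, and Python writes named groups as `(?P<name>...)`) to compare an escaped and an unescaped bracket pattern against the text [error].

```python
import re

text = "[error]"

# Escaped brackets are matched as literal characters around the word.
escaped = re.fullmatch(r"\[(?P<class>\w+)\]", text)
print(escaped.group("class"))  # error

# Unescaped, [error] is a character class: it matches ONE character from
# the set {e, r, o}, so it cannot match the whole bracketed text.
unescaped = re.fullmatch(r"[error]", text)
print(unescaped)  # None

# re.escape() inserts the backslashes when a literal string is wanted.
print(re.escape("[error]"))  # \[error\]
```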

To summarize, the pattern for the date/time stamp is creating the following fields in the Elasticsearch database:

timestamp, day, month

The next part of the pattern is as follows:

\[%{WORD:class}\]

It applies to this text: [error]

The WORD pattern matches a single word without spaces (made of the characters A-Za-z0-9_). The match is stored in the Elasticsearch database as the field called class. Once again, the square brackets are escaped because they are part of the text being matched.

To make the boundary clear, here is the \[%{WORD:class}\] pattern together with the last piece of the date/time stamp pattern:

%{YEAR})\] \[%{WORD:class}\]

Note the single space between \] and \[: it matches the space between the two bracketed sections of the log line. It is very important that the overall pattern we are defining is accurate. Otherwise, it will not match and the log data will not be broken up into fields in the Elasticsearch database.
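The WORD pattern and its word boundaries can be tried out directly in Python. This sketch assumes grok's definition `\b\w+\b`; the `re.ASCII` flag restricts Python's `\w` to A-Za-z0-9_, matching the range described above. It shows that a hyphen ends a WORD match, so a value such as not-found would be captured only partially.

```python
import re

# grok's WORD is \b\w+\b; with re.ASCII, \w is exactly [A-Za-z0-9_].
WORD = re.compile(r"\b\w+\b", re.ASCII)

for sample in ["error", "warn_2", "not-found"]:
    m = WORD.match(sample)
    print(sample, "->", m.group(0))
# error -> error
# warn_2 -> warn_2
# not-found -> not
```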

IP address

The next part of the pattern is as follows:

\[%{WORD:originator} %{IP:clientip}\]

It applies to this text:

[client 192.168.1.9]

The first WORD is stored in the Elasticsearch database as the field called originator.

The IP pattern identifies an IPv4 or IPv6 address, which is stored in the Elasticsearch database in the field called clientip.
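grok's IP pattern is a long regular expression covering both address families. As a swapped-in technique, this Python sketch captures the value with a deliberately loose regex and then uses the standard `ipaddress` module to confirm whether it is an IPv4 or IPv6 address; it illustrates the idea and is not grok's own logic. `is_ip` is a hypothetical helper.

```python
import ipaddress
import re

text = "[client 192.168.1.9]"

# Loose capture: any run of characters other than ']' or whitespace.
# This is a stand-in for grok's much stricter IP pattern.
m = re.match(r"\[(?P<originator>\w+) (?P<clientip>[^\]\s]+)\]", text)

# ipaddress.ip_address() accepts IPv4 or IPv6 and raises ValueError otherwise.
client = ipaddress.ip_address(m.group("clientip"))
print(m.group("originator"), client, client.version)  # client 192.168.1.9 4
print(ipaddress.ip_address("2001:db8::1").version)     # 6


def is_ip(value: str) -> bool:
    """Return True if value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False


print(is_ip("192.168.1.9"), is_ip("not-an-ip"))  # True False
```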

The last part of the pattern is as follows:

%{GREEDYDATA:errmsg}']

It applies to this text:

File does not exist: /usr/local/nagiosxi/html/someURL

The GREEDYDATA pattern matches all of the remaining text, which is stored in the Elasticsearch database as the field errmsg.

The single quote ends the entire <matching_pattern> and the square bracket ] ends the filter structure.
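Putting the pieces together, the default apache_error pattern can be hand-translated into a single Python regular expression and run against the sample line. This is a sketch under stated assumptions: Python writes named groups as `(?P<name>...)` instead of `(?<name>...)`, and the DAY, MONTH, TIME, YEAR and IP parts are simplified stand-ins for the real grok definitions.

```python
import re

# Hand translation of the default apache_error grok pattern.
# Assumption: DAY, MONTH, TIME, YEAR and IP are simplified here.
APACHE_ERROR = re.compile(
    r"\[(?P<timestamp>"
    r"(?P<day>Mon|Tue|Wed|Thu|Fri|Sat|Sun) "                        # %{DAY:day}
    r"(?P<month>Jan|Feb|Mar|Apr|May|Jun|Jul|Aug|Sep|Oct|Nov|Dec) "  # %{MONTH:month}
    r"(?:0[1-9]|[12][0-9]|3[01]|[1-9]) "                            # %{MONTHDAY}
    r"\d{2}:\d{2}:\d{2} "                                            # %{TIME}
    r"\d{4}"                                                         # %{YEAR}
    r")\] "
    r"\[(?P<class>\w+)\] "                                           # \[%{WORD:class}\]
    r"\[(?P<originator>\w+) "                                        # \[%{WORD:originator}
    r"(?P<clientip>[0-9A-Fa-f.:]+)\] "                               # %{IP:clientip}\]
    r"(?P<errmsg>.*)"                                                # %{GREEDYDATA:errmsg}
)

line = ("[Sun May 2 12:45:58 2021] [error] [client 192.168.1.9] "
        "File does not exist: /usr/local/nagiosxi/html/someURL")

fields = APACHE_ERROR.match(line).groupdict()
for name, value in fields.items():
    print(f"{name}: {value}")
```

Running it yields the seven fields discussed above: timestamp, day, month, class, originator, clientip and errmsg.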

Mutate

The mutate plugin lets us modify fields: we can replace the whole value of a field with a new value, or add, delete or append portions of it. Here is the mutate section from earlier:

mutate {
    replace => [ 'type', 'apache_error' ]
}

In our example, we replace the log type, which would otherwise be syslog, with apache_error. This allows us to differentiate between normal syslogs and Apache syslogs.
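The replace operation can be modelled as overwriting one key in the event. This Python sketch assumes an event is a dictionary, and `mutate_replace` is a hypothetical helper. As with the mutate replace option, the field is created if it does not already exist.

```python
from typing import Dict

Event = Dict[str, str]


def mutate_replace(event: Event, field: str, value: str) -> Event:
    """Rough analogue of: mutate { replace => ['<field>', '<value>'] }.

    The old value is discarded; a missing field is simply created.
    """
    updated = dict(event)  # copy so the caller's event is left untouched
    updated[field] = value
    return updated


event = {
    "type": "syslog",
    "program": "apache_error",
    "message": "File does not exist: /usr/local/nagiosxi/html/someURL",
}
print(mutate_replace(event, "type", "apache_error")["type"])  # apache_error
```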

Filter Conditions

We can restrict a filter to certain logs by using a simple if statement:

if [program] == 'apache_error' {
    grok {
    }
    mutate {
    }
}

Here, [program] must equal apache_error for these filters to be applied, so the log that Logstash receives must already identify its program as apache_error.

When we run the setup script on the Linux server that is running Apache, the script sets this program name to apache_error.

In Nagios Log Server, navigate to Configure > Add Log Source and select Apache Server. Under the Run the Script heading, we can see the following line of code:

$ sudo bash setup-linux.sh -s nagios_log_server -p 5544 -f "/var/log/httpd/error_log" -t apache_error

We can see that apache_error is defined as part of the setup script. The syslog application on the sending server will define these log entries as coming from apache_error.
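The conditional wrapper behaves like a guard around the filters it contains. Here is a minimal Python sketch, assuming events are dictionaries; `when_program` and `set_type` are hypothetical names used only for illustration.

```python
from typing import Callable, Dict, List

Event = Dict[str, str]
Filter = Callable[[Event], Event]


def when_program(name: str, filters: List[Filter]) -> Filter:
    """Rough analogue of: if [program] == '<name>' { ...filters... }."""
    def conditional(event: Event) -> Event:
        if event.get("program") != name:
            return event  # condition is false: the filters are skipped
        for f in filters:
            event = f(event)
        return event
    return conditional


def set_type(event: Event) -> Event:
    """Stand-in for the mutate replace step."""
    return {**event, "type": "apache_error"}


apache_only = when_program("apache_error", [set_type])
print(apache_only({"program": "apache_error", "type": "syslog"})["type"])  # apache_error
print(apache_only({"program": "sshd", "type": "syslog"})["type"])          # syslog
```

Entries whose program name was not set to apache_error by the sending server fall through untouched, which is why the -t apache_error option matters.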

Patterns

Patterns give complex regular expressions short, understandable names. For example:

MONTHDAY = (?:(?:0[1-9])|(?:[12][0-9])|(?:3[01])|[1-9])

GREEDYDATA = .*

WORD = \b\w+\b
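These definitions are ordinary regular expressions, so they can be compiled and tested directly. This Python sketch uses the three expressions listed above with the standard `re` module; `fullmatch` is used for MONTHDAY so that it is clear which whole values are accepted.

```python
import re

# The pattern definitions listed above, compiled as Python regexes.
MONTHDAY = re.compile(r"(?:(?:0[1-9])|(?:[12][0-9])|(?:3[01])|[1-9])")
GREEDYDATA = re.compile(r".*")
WORD = re.compile(r"\b\w+\b")

for day in ["1", "09", "22", "31", "0", "32"]:
    print(day, bool(MONTHDAY.fullmatch(day)))
# 1 True, 09 True, 22 True, 31 True, 0 False, 32 False (one per line)

print(WORD.findall("File does not exist"))        # ['File', 'does', 'not', 'exist']
print(GREEDYDATA.match("anything at all").group(0))  # anything at all
```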

Filter Configuration Options

Navigate to Configure > Global (All Instances) > Global Config. The Filters table contains several preconfigured filters called blocks. The blocks have several icons.

The Active box next to each filter indicates that the filter is active. Clicking the Active box marks the filter as Inactive, and the next time the configuration is applied, this filter will not be part of the running configuration. This allows us to save filters even if we do not want to use them right away.

Clicking the box again toggles it back to Active. Each block also has the following icons:

i. Rename: allows us to rename the block.

ii. Open/Close: expands the block to show the filter configuration in a text field. When the block is open, the icon changes to a hyphen; clicking the hyphen collapses the block.

iii. Copy: creates a duplicate of the block, which makes it easy to create a new filter based on the configuration of an existing filter.

iv. Remove: deletes the block.

Click the Save button to save the changes. Otherwise, if we navigate away from the page, all our changes will be lost.
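The effect of the Active toggle can be pictured with a small model. This is a hypothetical Python sketch, not how Nagios Log Server is implemented: `Block` and `build_config` are invented names, and wrapping the active blocks in a `filter { }` section is an assumption made for illustration. Only blocks marked active contribute to the generated configuration, while inactive ones stay saved in the list.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    """Hypothetical model of one block in the Filters table."""
    name: str
    config: str
    active: bool = True


def build_config(blocks: List[Block]) -> str:
    """Join the text of active blocks, in list order, into one filter section."""
    body = "\n".join(b.config for b in blocks if b.active)
    return "filter {\n" + body + "\n}"


blocks = [
    Block("Apache", "if [program] == 'apache_error' { }"),
    Block("Saved for later", "if [type] == 'testing' { }", active=False),
]
print(build_config(blocks))
```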

Adding A Filter

The best way to learn how filters work is to add one and then send some log data. The following example relies on a test file input.

To add an input, click the Add Input drop down list and select Custom. A new block will appear at the bottom of the list of Inputs. Type a unique name for the input. In the text field, we will need to define the input configuration. Here is a basic example for a local file on the Nagios Log Server machine itself:

file {
    type => "testing"
    path => "/tmp/test.log"
}

Once we have finished, click the Save button.

Apply configuration

For the new input to become active, we will need to apply the configuration. Before doing so, we should verify the configuration.

To apply the configuration, in the left-hand pane under Configure click Apply Configuration. Click the Apply button and then, in the modal that appears, click the Yes, Apply Now button. When it completes, the screen will refresh with a completed message.

On the Global Config page, click the Add Filter drop down list and select Custom.

A new filter will appear at the bottom of the list of Filters. Type a unique name for the filter.

In the text field, we will need to define the filter configuration.

Paste the following example:

if [type] == 'testing' {
    grok {
        match => [ 'message', '%{WORD:first_word} %{WORD:second_word} %{GREEDYDATA:everything_else}' ]
    }
}

Once we have finished, click the Save button.

For the new filter to become active, we will need to Apply Configuration.

Apply Configuration

To apply the configuration, in the left-hand pane under Configure click Apply Configuration. Click the Apply button and then, in the modal that appears, click the Yes, Apply Now button.

Wait while it applies the configuration. When it completes, the screen will refresh with a completed message.

Test Filter

Using the filter example created previously, we can confirm that the filter works. Open a terminal session on the Nagios Log Server instance and run the following command:

echo "This is a test log entry" >> /tmp/test.log

Now in Nagios Log Server, open the Dashboards page and perform the query type:testing.

The query should return one result in the ALL EVENTS panel.

Clicking on the log entry will show the full details about the entry.

Here we can see that the filter successfully broke the message field into three fields: first_word (This), second_word (is) and everything_else (a test log entry).
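To check the expected result outside Nagios Log Server, the test pattern can be translated into a Python regular expression, assuming grok's definitions WORD = \b\w+\b and GREEDYDATA = .* (Python writes named groups as `(?P<name>...)`). `re.search` is used because grok looks for a match anywhere in the field rather than only at the start.

```python
import re

# Python translation of:
#   %{WORD:first_word} %{WORD:second_word} %{GREEDYDATA:everything_else}
TEST_PATTERN = re.compile(
    r"(?P<first_word>\b\w+\b) (?P<second_word>\b\w+\b) (?P<everything_else>.*)"
)

fields = TEST_PATTERN.search("This is a test log entry").groupdict()
print(fields)
# {'first_word': 'This', 'second_word': 'is', 'everything_else': 'a test log entry'}

# A line that does not fit the structure produces no fields at all.
print(TEST_PATTERN.search("single"))  # None
```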

It is relatively simple to create a filter to break apart the log data into separate fields. It is very important that the overall pattern we are defining is accurate. Otherwise, it will not match and the log data will not be broken up into fields in the Elasticsearch database.

Verify Configuration

The Verify button checks that the current saved configuration is valid. It is useful after updating our configurations and before attempting to apply the configuration.

Wait while the configuration is verified.

If we do not receive a Configuration is OK message, then we will need to fix the problem before applying the configuration.

Save vs Save & Apply

There are two separate buttons, Save and Save & Apply.

Save stores our changes without making them active immediately. It allows us to navigate away from the Configure screen without losing our work.

Save & Apply, on the other hand, saves our changes and then makes them active immediately.

View Logstash configuration

The View button allows us to view the raw Logstash configuration. When we click the button, we have a choice of selecting an individual config or a combination of all the configs.

This opens a new modal window that lets us view and copy the configuration.


Conclusion

This article covered how to customize Nagios Log Server filters. To configure filters in Nagios Log Server, one needs to be familiar with the filter configuration options available within the log server.

Filters can be applied to messages before they are sent to Elasticsearch for indexing. They perform actions such as breaking apart messages into fields for easy searching, adding geolocation information, resolving IP addresses to DNS names and dropping messages you do not want indexed.
