Are you trying to configure software RAID on Linux using MDADM?

This guide will help you.

MDADM is a tool that allows you to create and manage software RAIDs on Linux.

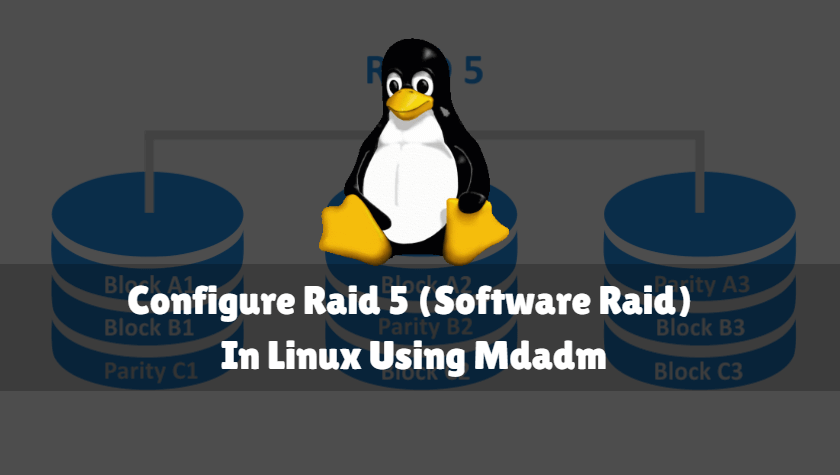

RAID stands for Redundant Array of Inexpensive Disks. RAID allows you to turn multiple physical hard drives into a single logical hard drive.

To set up RAID, we can either use a hardware RAID controller or create the array in software.

Here at Ibmi Media, as part of our Server Management Services, we regularly help our Customers to configure software RAID on Linux using MDADM.

In this context, you will learn how to use mdadm (multiple disks admin) to create RAID array storage, add and manage disks, add a hot-spare and more.

How to Configure Software RAID on Linux using MDADM?

MDADM is a tool for creating and managing software RAIDs on Linux.

Before going through the configuration steps, let's check the prerequisites for the setup.

Following are the prerequisites and the plan for this setup:

1. Firstly, we need a Linux distribution installed on a system hard drive (/dev/vda in this example).

2. Then, two additional empty hard drives, /dev/vdb and /dev/vdc.

3. Next, we will combine /dev/vdb and /dev/vdc into a RAID 1 array using the MDADM utility.

4. Finally, we will create a file system on the resulting RAID device.

Steps to Configure Software RAID on Linux using MDADM

Following are the steps that our Support Experts follow to configure software RAID:

1. Installing MDADM

We can run the following installation command depending upon the operating system:

For CentOS/Red Hat we can use the following command:

# yum install mdadm

For Ubuntu/Debian we can use the following command:

# apt-get install mdadm

This will install mdadm and the libraries it depends on.
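If you manage servers running different distributions, picking the right package manager can be scripted. The sketch below is a hypothetical helper (mdadm_install_cmd is our own name, not part of any tool) that maps the ID value from /etc/os-release to the install commands listed in this article. The set of IDs it recognises is an assumption and may need extending for your systems.

```shell
#!/bin/sh
# Hypothetical helper: print the mdadm install command for a distribution,
# given the ID= value from /etc/os-release. The IDs below are an assumption
# covering the distributions mentioned in this article.
mdadm_install_cmd() {
  case "$1" in
    centos|rhel|rocky|almalinux|fedora) echo "yum install mdadm" ;;
    ubuntu|debian)                      echo "apt-get install mdadm" ;;
    sles|opensuse*)                     echo "zypper install mdadm" ;;
    arch)                               echo "pacman -S mdadm" ;;
    *)
      # Unknown ID: report it on stderr and signal failure
      echo "unsupported distribution: $1" >&2
      return 1
      ;;
  esac
}
```

For example, on an Ubuntu host, running . /etc/os-release && mdadm_install_cmd "$ID" would print apt-get install mdadm, which you can then run as root.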

2. How to create RAID 1 (Mirror) using two disks on Linux

We can create RAID1 using the following steps:

i. Firstly, we have to zero all superblocks on the disks to be added to the RAID using the following command:

# mdadm --zero-superblock --force /dev/vd{b,c}

Here, vdb and vdc are the two clean disks, so the command returns:

mdadm: Unrecognised md component device - /dev/vdb

mdadm: Unrecognised md component device - /dev/vdc

The above output means that neither of the disks has ever been added to an array.

ii. Then create a software RAID1 array, /dev/md0, from the two disks using the following command:

# mdadm --create --verbose /dev/md0 -l 1 -n 2 /dev/vd{b,c}

iii. Alternatively, to create a RAID0 (stripe) instead, which improves read/write speed by spreading I/O across several physical disks, use the following command:

# mdadm --create --verbose /dev/md0 --level=0 --raid-devices=2 /dev/vdb /dev/vdc

For a RAID 5 of three or more drives, we can use the following command:

# mdadm --create --verbose /dev/md0 --level=5 --raid-devices=3 /dev/vdb /dev/vdc /dev/vdd

iv. The rest of this guide uses the RAID1 array. When we list the information about our disks, we will see the md0 RAID device:

We can use the following command for listing:

# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

vda 253:0 0 20G 0 disk

├─vda1 253:1 0 512M 0 part /boot

└─vda2 253:2 0 19.5G 0 part /

vdb 253:16 0 20G 0 disk

└─md0 9:0 0 20G 0 raid1

vdc 253:32 0 20G 0 disk

└─md0 9:0 0 20G 0 raid1

v. Then, to create an ext4 file system on the RAID1 device, run the following command:

# mkfs.ext4 /dev/md0

vi. After that, we can create a /backup directory, mount the RAID device on it and check the result:

# mkdir /backup

# mount /dev/md0 /backup/

# df -h

vii. To avoid mounting the device manually after every reboot, add the following line to /etc/fstab:

# nano /etc/fstab

/dev/md0 /backup ext4 defaults 1 2

3. How to view the state or check the integrity of a RAID Array

i. Firstly, to check the data integrity of the array, use the following command:

# echo 'check' > /sys/block/md0/md/sync_action

ii. Then we can view the contents of the following file:

# cat /sys/block/md0/md/mismatch_cnt

Once the check has finished, a value of 0 means our array is OK.
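Because mismatch_cnt only holds the final count once the check has finished, it helps to look at sync_action first. The following is a minimal sketch, assuming the md sysfs layout used above; md_check_result is a hypothetical helper name, and the directory is passed as an argument so the logic can also be tried against a test directory.

```shell
#!/bin/sh
# Hypothetical helper: report the outcome of an md "check" run.
# $1 is the array's md sysfs directory (default /sys/block/md0/md).
# Exit status: 0 = finished, no mismatches; 1 = finished with mismatches;
# 2 = sync_action is not "idle" yet, so mismatch_cnt is not final;
# 3 = the sysfs files could not be read.
md_check_result() {
  dir=${1:-/sys/block/md0/md}
  action=$(cat "$dir/sync_action") || return 3
  if [ "$action" != "idle" ]; then
    # Any value other than idle (check, resync, recover...) counts as running
    echo "in progress: $action"
    return 2
  fi
  count=$(cat "$dir/mismatch_cnt") || return 3
  if [ "$count" -eq 0 ]; then
    echo "OK"
    return 0
  fi
  echo "mismatches: $count"
  return 1
}
```

After starting the check as shown above, a caller could poll until the helper stops returning 2, for example: while md_check_result /sys/block/md0/md; [ $? -eq 2 ]; do sleep 60; done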

iii. To stop the check, we can run the following:

# echo 'idle' > /sys/block/md0/md/sync_action

iv. To check the state of all RAID arrays available on the server, use this command:

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 vdc[1] vdb[0]

20954112 blocks super 1.2 [2/2] [UU]

v. We can view more detailed information about a specific RAID array using the following command:

# mdadm -D /dev/md0

vi. We can also view brief information using fdisk:

# fdisk -l /dev/md0

Disk /dev/md0: 21.5 GB, 21457010688 bytes, 41908224 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

4. How to Recover from a Disk Failure in RAID

If one of the disks in a RAID fails or gets damaged, we can replace it with another one.

We can use the following steps to recover from a disk failure in RAID:

i. Firstly, we need to find out whether a disk is damaged and needs to be replaced. This can be done with the following command:

# cat /proc/mdstat

Personalities : [raid1]

md0 : active raid1 vdb[0]

20954112 blocks super 1.2 [2/1] [U_]

From this output, we can see that only one of the two disks is active ([2/1]). The underscore in [U_] marks the missing member, which means a problem exists.

When both disks are healthy, the output will be [UU].
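Looking for the underscore can be automated, for example from a cron job. The sketch below assumes the /proc/mdstat layout shown above (an mdN header line followed by a status line ending in [UU] or [U_]); degraded_arrays is a hypothetical helper, not an mdadm command.

```shell
#!/bin/sh
# Hypothetical helper: print the name of every md array whose status
# bracket (e.g. [U_]) contains "_", i.e. has a missing or failed member.
# Reads /proc/mdstat by default, or the file named in $1.
degraded_arrays() {
  awk '
    # Array header, e.g. "md0 : active raid1 vdc[1] vdb[0]"
    /^md[0-9]+ :/ { name = $1; next }
    # First status line after the header, e.g. "... [2/1] [U_]"
    name != "" && /\[[U_]+\]/ {
      if ($0 ~ /\[[U_]*_[U_]*\]/) print name
      name = ""
    }
  ' "${1:-/proc/mdstat}"
}
```

Running degraded_arrays with no argument reads /proc/mdstat; an empty result means every array reports all of its members as up.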

ii. To get more details, we can use the following command:

# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Tue Dec 31 12:39:22 2020

Raid Level : raid1

Array Size : 20954112 (19.98 GiB 21.46 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Dec 31 14:41:13 2020

State : clean, degraded

Active Devices : 1

Working Devices : 1

Failed Devices : 1

The State : clean, degraded and Failed Devices : 1 lines show that one of the disks in the RAID has failed.

In our case, /dev/vdc must be replaced.

iii. To restore the array, we must remove the damaged disk and add a new one.

To remove the failed drive, we can use the following command:

# mdadm /dev/md0 --remove /dev/vdc

iv. To add a new disk to the array, we can use the following command:

# mdadm /dev/md0 --add /dev/vdd

v. Disk recovery starts automatically after the new disk is added. We can check its progress with the following command:

# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Tue Dec 31 12:39:22 2020

Raid Level : raid1

Array Size : 20954112 (19.98 GiB 21.46 GB)

Used Dev Size : 20954112 (19.98 GiB 21.46 GB)

Raid Devices : 2

Total Devices : 2

Persistence : Superblock is persistent

Update Time : Tue Dec 31 14:50:20 2020

State : clean, degraded, recovering

Active Devices : 1

Working Devices : 2

Failed Devices : 0

Spare Devices : 1

Consistency Policy : resync

Rebuild Status : 48% complete

Name : host1:0 (local to host host1)

UUID : 9d59b1fb:7b0a7b6d:15a75459:8b1637a2

Events : 42

Number Major Minor RaidDevice State

0 253 16 0 active sync /dev/vdb

2 253 48 1 spare rebuilding /dev/vdd

Rebuild Status : 48% complete shows the current array recovery state.

spare rebuilding /dev/vdd shows which disk is being added to the array.
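To follow the rebuild without reading the whole report each time, the Rebuild Status line can be pulled out of the mdadm -D output. This is a minimal sketch that assumes the "Field : value" layout shown above; rebuild_percent is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: read "mdadm -D" output on stdin and print the number
# from the "Rebuild Status : NN% complete" line, or "not rebuilding" if the
# report has no such line.
rebuild_percent() {
  awk -F' : ' '
    {
      # Real output right-aligns field names with leading spaces, so trim them
      key = $1
      sub(/^[ \t]+/, "", key)
      sub(/[ \t]+$/, "", key)
    }
    key == "Rebuild Status" {
      split($2, parts, "%")   # "48% complete" -> parts[1] = "48"
      print parts[1]
      found = 1
    }
    END { if (!found) print "not rebuilding" }
  '
}
```

For the output above, mdadm -D /dev/md0 | rebuild_percent prints 48; once the rebuild has finished it prints not rebuilding.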

After the array has been rebuilt, check its state again:

State : clean

Active Devices : 2

Working Devices : 2

Failed Devices : 0

Spare Devices : 0

5. How to add or remove disks in software RAID on Linux

i. If we need to remove the previously created mdadm RAID device, we can use the following command to unmount it:

# umount /backup

ii. Then run the following command:

# mdadm -S /dev/md0

mdadm: stopped /dev/md0

iii. After the RAID array has been stopped, it is no longer detected as a separate disk device, so running the same command again returns an error:

# mdadm -S /dev/md0

mdadm: error opening /dev/md0: No such file or directory

iv. We can scan all connected drives and re-assemble a previously stopped (or failed) RAID device from the metadata on the physical drives.

For this we can run the following command:

# mdadm --assemble --scan

v. If we want to remove a working drive from an array and replace it, we first mark the drive as failed using the following command:

# mdadm /dev/md0 --fail /dev/vdc

vi. Then remove it using the following command:

# mdadm /dev/md0 --remove /dev/vdc

vii. Finally, we can add a new disk, just as in the case of a failed drive, using the following command:

# mdadm /dev/md0 --add /dev/vdd

6. How to add a Hot-Spare Drive to an MDADM Array?

We can add an extra hot-spare drive to quickly rebuild the RAID array if one of the active disks fails.

i. Firstly, add a free disk to the md device we want using the following command:

# mdadm /dev/md0 --add /dev/vdc

This disk will be shown as a spare disk when we check the status of the RAID.

ii. To make sure that the hot spare works, mark any active drive as failed and check the RAID status using the following command:

# mdadm /dev/md0 --fail /dev/vdb

When we check the status, we can see that the array has started rebuilding onto the spare.

iii. To add an additional active disk to the RAID, we must follow these two steps:

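a. Add an empty drive to the array. Since /dev/vdb was marked as failed in the previous step, it has to be removed first with mdadm /dev/md0 --remove /dev/vdb before it can be added again: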

# mdadm /dev/md0 --add /dev/vdb

b. Now this disk will be displayed as a hot spare. To make it active, grow the md RAID device:

# mdadm -G /dev/md0 --raid-devices=3

The array will then be rebuilt.

After the rebuild, all the disks become active:

Number Major Minor RaidDevice State

3 253 32 0 active sync /dev/vdc

2 253 48 1 active sync /dev/vdd

4 253 16 2 active sync /dev/vdb

7. How to remove an MDADM RAID Array

If we want to permanently remove our software RAID device, use the following steps:

Unmount the array from its directory:

# umount /backup

Stop the RAID device:

# mdadm -S /dev/md0

Then clear the superblocks on all the disks it was built from:

# mdadm --zero-superblock /dev/vdb

# mdadm --zero-superblock /dev/vdc
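These removal steps have to run in order: the array cannot be stopped while it is still mounted, and the member superblocks can only be wiped once the array is stopped. The sketch below wraps the sequence in a hypothetical remove_md_array function; with DRY_RUN=1 it only prints the commands so they can be reviewed before anything destructive runs. The function names and the DRY_RUN variable are our own conventions, not mdadm features.

```shell
#!/bin/sh
# Hypothetical wrapper: with DRY_RUN=1, print the command instead of running it.
run_or_echo() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Usage: remove_md_array MOUNTPOINT MD_DEVICE MEMBER_DISK...
# Unmounts, stops the array, then wipes each member superblock,
# stopping at the first command that fails.
remove_md_array() {
  if [ $# -lt 3 ]; then
    echo "usage: remove_md_array MOUNTPOINT MD_DEVICE DISK..." >&2
    return 2
  fi
  mnt=$1
  md=$2
  shift 2
  run_or_echo umount "$mnt" || return 1
  run_or_echo mdadm -S "$md" || return 1
  for disk in "$@"; do
    run_or_echo mdadm --zero-superblock "$disk" || return 1
  done
}
```

For this guide, DRY_RUN=1 remove_md_array /backup /dev/md0 /dev/vdb /dev/vdc prints the four commands from this section. The function does not touch the /dev/md0 line added to /etc/fstab earlier, so that entry would need to be removed separately.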

Conclusion

This article covers how to configure software RAID on Linux using MDADM.

To Install a Software RAID Management Tool:

To install mdadm, run the installation command:

1. For CentOS/Red Hat (yum or dnf is used): $ sudo yum install mdadm

2. For Ubuntu/Debian: $ sudo apt-get install mdadm

3. For SUSE: $ sudo zypper install mdadm

4. For Arch Linux: $ sudo pacman -S mdadm

Terms related to the integrity of a RAID array (fields shown by mdadm -D):

1. Version – the metadata version

2. Creation Time – the date and time the RAID was created

3. Raid Level – the RAID level of the array

4. Array Size – the usable size of the RAID array

5. Used Dev Size – the amount of space used on each member device

6. Raid Devices – the number of disks in the RAID

7. Total Devices – the number of disks added to the RAID

8. State – the current state (clean means the array is OK)

9. Active Devices – the number of active disks in the RAID

10. Working Devices – the number of working disks in the RAID

11. Failed Devices – the number of failed disks in the RAID

12. Spare Devices – the number of spare disks in the RAID

13. Consistency Policy – how the array restores consistency after an unexpected shutdown; resync means a full resynchronization (bitmap, journal and ppl policies are also available)

14. UUID – the RAID array identifier

To Recover from a Disk Failure in RAID (Disk Replacement):

If one of the disks in a RAID fails or is damaged, you can replace it with another one. First of all, find out whether a disk is damaged and needs to be replaced:

# cat /proc/mdstat

To Add or Remove Disks to Software RAID on Linux:

1. If you need to remove the previously created mdadm RAID device, unmount it:

# umount /backup

2. Then run this command:

# mdadm -S /dev/md0

mdadm: stopped /dev/md0

3. After the RAID array has been stopped, it is no longer detected as a separate disk device:

# mdadm -S /dev/md0

mdadm: error opening /dev/md0: No such file or directory

4. You can scan all connected drives and re-assemble a previously stopped (or failed) RAID device from the metadata on the physical drives. Run the following command:

# mdadm --assemble --scan

About Mdmonitor: RAID State Monitoring & Email Notifications:

The mdmonitor daemon can be used to monitor the status of the RAID.

1. First, you must create the /etc/mdadm.conf file containing the current array configuration:

# mdadm --detail --scan > /etc/mdadm.conf

The mdadm.conf file is not created automatically. You must create and update it manually.

2. Add the administrator's e-mail address, to which notifications about any RAID problems should be sent, to the end of /etc/mdadm.conf:

MAILADDR raidadmin@woshub.com

3. Then restart the mdmonitor service using systemctl:

# systemctl restart mdmonitor

Then the system will notify you by e-mail if there are any mdadm errors or faulty disks.
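Steps 1 and 2 can be combined so that the ARRAY lines and the MAILADDR line are written in one go. The sketch below is a hypothetical helper (write_mdadm_conf is our own name) that reads mdadm --detail --scan output on stdin, appends the MAILADDR line, and refuses to replace the file if no ARRAY lines were produced. Like the redirect in step 1, it overwrites any existing content. It assumes the /etc/mdadm.conf location used in this article; Debian and Ubuntu conventionally keep the file at /etc/mdadm/mdadm.conf instead.

```shell
#!/bin/sh
# Hypothetical helper: build an mdadm.conf from "mdadm --detail --scan"
# output read on stdin, plus a MAILADDR line for mdmonitor notifications.
# $1 = target file (e.g. /etc/mdadm.conf), $2 = administrator e-mail address.
write_mdadm_conf() {
  conf=$1
  email=$2
  tmp="$conf.tmp.$$"
  {
    cat                      # ARRAY lines from mdadm --detail --scan
    echo "MAILADDR $email"   # where mdmonitor sends alerts
  } > "$tmp" || return 1
  # Do not replace the config if the scan produced no arrays
  if ! grep -q '^ARRAY ' "$tmp"; then
    rm -f "$tmp"
    echo "no ARRAY lines on stdin; is the array assembled?" >&2
    return 1
  fi
  mv "$tmp" "$conf"
}
```

On the server from this guide, that would be mdadm --detail --scan | write_mdadm_conf /etc/mdadm.conf raidadmin@woshub.com, followed by systemctl restart mdmonitor.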
