Are you trying to set up Nginx ingress on DigitalOcean Kubernetes with cert-manager?

This guide is for you.

Kubernetes Ingresses allow you to flexibly route traffic from outside your Kubernetes cluster to Services inside of your cluster.

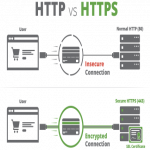

This is accomplished using Ingress Resources, which define rules for routing HTTP and HTTPS traffic to Kubernetes Services, and Ingress Controllers, which implement the rules by load balancing traffic and routing it to the appropriate backend Services.

Here at Ibmi Media, as part of our Server Management Services, we regularly help our customers resolve DigitalOcean-related queries.

In this context, we shall look into how to set up Nginx ingress on DigitalOcean.

How to set up Nginx ingress on DigitalOcean Kubernetes with cert-manager?

Now let's take a look at how our Support Experts set up Nginx ingress.

Step 1 — Setting Up Dummy Backend Services

First, we shall create two dummy echo Services so that we can route external traffic to them using the Ingress. These echo Services will run the hashicorp/http-echo web server container.

On the local machine, we create and edit a file called echo1.yaml:

```
$ nano echo1.yaml
```

Then we paste the below code:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: echo1
spec:
  ports:
  - port: 80
    targetPort: 5678
  selector:
    app: echo1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo1
spec:
  selector:
    matchLabels:
      app: echo1
  replicas: 2
  template:
    metadata:
      labels:
        app: echo1
    spec:
      containers:
      - name: echo1
        image: hashicorp/http-echo
        args:
        - "-text=echo1"
        ports:
        - containerPort: 5678
```

Here, in the above code we define a Service called echo1 which routes traffic to Pods with the app: echo1 label selector.

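It accepts TCP traffic on port 80 and routes it to port 5678, http-echo's default port. The Deployment, also called echo1, runs two replicas of the hashicorp/http-echo container; the -text=echo1 argument makes each Pod respond with echo1, and containerPort opens port 5678 on the container.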
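The port mapping above can also be expressed with a named container port, so that the Service's targetPort refers to a name instead of repeating 5678. The following is a sketch of echo1.yaml written that way; the port name http is our own hypothetical choice, not something the tutorial or http-echo requires:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: echo1
spec:
  ports:
  - port: 80            # port exposed by the Service (used by the Ingress)
    targetPort: http    # resolved to the container port named "http" below
  selector:
    app: echo1
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo1
spec:
  selector:
    matchLabels:
      app: echo1
  replicas: 2
  template:
    metadata:
      labels:
        app: echo1
    spec:
      containers:
      - name: echo1
        image: hashicorp/http-echo
        args:
        - "-text=echo1"
        ports:
        - name: http            # hypothetical port name referenced by the Service
          containerPort: 5678   # http-echo's default listen port
```

With this layout, changing the container's port only touches the Deployment; the Service keeps referring to the name http.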

Then we save and close the file.

After that, we create the Kubernetes resources using kubectl apply with the -f flag, specifying the file we just saved as a parameter:

```
$ kubectl apply -f echo1.yaml
```

We then verify that the Service started correctly by confirming that it has a ClusterIP, the internal IP on which the Service is exposed:

```
$ kubectl get svc echo1
```

The output shows that the echo1 Service is up and running with an assigned ClusterIP. Now we can repeat the same steps for the echo2 Service.

For that, we create and open a file called echo2.yaml.

Then we add the below code:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: echo2
spec:
  ports:
  - port: 80
    targetPort: 5678
  selector:
    app: echo2
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: echo2
spec:
  selector:
    matchLabels:
      app: echo2
  replicas: 1
  template:
    metadata:
      labels:
        app: echo2
    spec:
      containers:
      - name: echo2
        image: hashicorp/http-echo
        args:
        - "-text=echo2"
        ports:
        - containerPort: 5678
```

We then save and close the file.

Then we create the Kubernetes resources using kubectl:

```
$ kubectl apply -f echo2.yaml
```

Once again, we verify that the Service is up and running by running the below command.

```
$ kubectl get svc
```

As a result, we can see both the echo1 and echo2 Services with assigned ClusterIPs.

Step 2 — Setting Up the Kubernetes Nginx Ingress Controller

Now, we shall roll out v0.34.1 of the Kubernetes-maintained Nginx Ingress Controller.

Let's start by creating the Nginx Ingress Controller Kubernetes resources. These consist of ConfigMaps containing the Controller’s configuration, Role-based Access Control (RBAC) Roles to grant the Controller access to the Kubernetes API, and the actual Ingress Controller Deployment.

To create the resources, use kubectl apply and the -f flag to specify the manifest file hosted on GitHub:

```
$ kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v0.34.1/deploy/static/provider/do/deploy.yaml
```

Then we confirm that the Ingress Controller Pods have started by running the below command.

```
$ kubectl get pods -n ingress-nginx \
  -l app.kubernetes.io/name=ingress-nginx --watch
```

Once the controller Pod shows a Running status, we hit CTRL+C to return to the prompt.

Now, confirm that the DigitalOcean Load Balancer was successfully created by fetching the Service details with kubectl:

```
$ kubectl get svc --namespace=ingress-nginx
```

After some time, we can see an external IP address corresponding to the IP address of the DigitalOcean Load Balancer.

This load balancer receives traffic on HTTP and HTTPS ports 80 and 443, and forwards it to the Ingress Controller Pod.
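The forwarding described above is handled by a Service of type LoadBalancer that deploy.yaml creates in the ingress-nginx namespace; DigitalOcean then provisions a Load Balancer for it. The following is a simplified sketch of such a Service, not a copy of the upstream manifest, which adds further labels and DigitalOcean-specific annotations. The name ingress-nginx-controller, the selector labels, and the named target ports http and https are assumptions about what the manifest creates; compare them with the kubectl get svc output above.

```yaml
# Simplified sketch, not the upstream manifest.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller   # assumed name of the controller Service
  namespace: ingress-nginx
spec:
  type: LoadBalancer               # asks DigitalOcean to provision a Load Balancer
  selector:                        # assumed labels on the controller Pods
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/component: controller
  ports:
  - name: http
    port: 80                       # HTTP traffic arriving at the Load Balancer
    targetPort: http               # controller container port named "http"
  - name: https
    port: 443                      # HTTPS traffic arriving at the Load Balancer
    targetPort: https              # controller container port named "https"
```

Because every Ingress is served through this one Service, the cluster needs only a single DigitalOcean Load Balancer regardless of how many backend Services are exposed.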

Step 3 — Creating the Nginx Ingress Resource

Here we shall create a minimal Ingress Resource to route traffic directed at a given subdomain to a corresponding backend Service.

First, we open a file called echo_ingress.yaml in our favorite editor:

```
$ nano echo_ingress.yaml
```

Then we paste the below ingress definition in echo_ingress.yaml:

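```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echo-ingress
spec:
  rules:
  - host: echo1.example.com
    http:
      paths:
      - backend:
          serviceName: echo1
          servicePort: 80
  - host: echo2.example.com
    http:
      paths:
      - backend:
          serviceName: echo2
          servicePort: 80
```

Here, requests for echo1.example.com are routed to the echo1 Service on port 80, and requests for echo2.example.com are routed to the echo2 Service. Once done, we save and close the file.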
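The networking.k8s.io/v1beta1 Ingress API used above is no longer served from Kubernetes 1.22 onward, and ingress-nginx 1.x controllers read only the networking.k8s.io/v1 API. On such clusters the same rules can be written in the v1 form. This is a sketch, assuming the controller registers an IngressClass named nginx:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: echo-ingress
spec:
  ingressClassName: nginx      # assumed IngressClass name of the controller
  rules:
  - host: echo1.example.com
    http:
      paths:
      - path: /                # path and pathType are required in v1
        pathType: Prefix
        backend:
          service:
            name: echo1
            port:
              number: 80
  - host: echo2.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: echo2
            port:
              number: 80
```

Apart from the apiVersion, the differences are that every path needs path and pathType, and the backend is written as service.name and service.port.number instead of serviceName and servicePort.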

Now, we create the Ingress using kubectl:

```
$ kubectl apply -f echo_ingress.yaml
```

To test the Ingress, we go to our DNS management service and create A records for echo1.example.com and echo2.example.com pointing to the DigitalOcean Load Balancer's external IP.

Once the DNS records have propagated, we can test the Ingress Controller and Resource we've created.

From the local machine, curl the echo1 Service:

```
$ curl echo1.example.com
```

We should get output that displays echo1. This confirms that the request to echo1.example.com is being correctly routed through the Nginx Ingress to the echo1 backend Service.

Now, we perform the same test for the echo2 Service:

```
$ curl echo2.example.com
```

This time, the output should display echo2.

At this point, we have set up a minimal Nginx Ingress to perform virtual host-based routing.

Step 4 — Installing and Configuring Cert-Manager

Now, we shall install v0.16.1 of cert-manager into our cluster. For that, we run the below command.

```
$ kubectl apply --validate=false -f https://github.com/jetstack/cert-manager/releases/download/v0.16.1/cert-manager.yaml
```

Then we verify the installation by running the below command.

```
$ kubectl get pods --namespace cert-manager
```

Once the cert-manager Pods are in the Running state, we can create a test ClusterIssuer to make sure the certificate provisioning mechanism is functioning correctly.

For that, we open a file named staging_issuer.yaml in any text editor:

```
$ nano staging_issuer.yaml
```

Into it, we add the below ClusterIssuer manifest:

```yaml
apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
  namespace: cert-manager
spec:
  acme:
    # The ACME server URL
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: your_email_address_here
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-staging
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx
```

After replacing your_email_address_here with our own email address, we roll out the ClusterIssuer using kubectl:

```
$ kubectl create -f staging_issuer.yaml
```

We should see output confirming that the ClusterIssuer was created. We now repeat this process to create the production ClusterIssuer.

For that, we open a file called prod_issuer.yaml.

```
$ nano prod_issuer.yaml
```

Then we paste the below code:

```yaml
apiVersion: cert-manager.io/v1alpha2
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod
  namespace: cert-manager
spec:
  acme:
    # The ACME server URL
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: your_email_address_here
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Enable the HTTP-01 challenge provider
    solvers:
    - http01:
        ingress:
          class: nginx
```

Then we save and close the file.

After that, we roll out this ClusterIssuer using kubectl:

```
$ kubectl create -f prod_issuer.yaml
```

Again, we should see output confirming that the ClusterIssuer was created.
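The cert-manager.io/v1alpha2 API in both issuer manifests matches cert-manager v0.16.1. cert-manager 1.0 introduced the cert-manager.io/v1 API, and later releases stopped serving v1alpha2, so a newer installation needs the v1 form. As a sketch, the production ClusterIssuer would look like this; since a ClusterIssuer is cluster-scoped, the namespace line is dropped:

```yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-prod         # cluster-scoped resource: no namespace field
spec:
  acme:
    # Let's Encrypt production ACME endpoint
    server: https://acme-v02.api.letsencrypt.org/directory
    # Email address used for ACME registration
    email: your_email_address_here
    # Secret that stores the ACME account private key
    privateKeySecretRef:
      name: letsencrypt-prod
    # Solve HTTP-01 challenges through Ingresses of class nginx
    solvers:
    - http01:
        ingress:
          class: nginx
```

The staging ClusterIssuer converts the same way: only apiVersion changes and the namespace line goes away.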

Step 5 — Issuing Staging and Production Let’s Encrypt Certificates

To issue a staging TLS certificate for our domains, we’ll annotate echo_ingress.yaml with the ClusterIssuer created in Step 4.

For that, we open echo_ingress.yaml:

```
$ nano echo_ingress.yaml
```

Then we update it to match the following Ingress resource manifest:

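```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echo-ingress
  annotations:
    cert-manager.io/cluster-issuer: "letsencrypt-staging"
spec:
  tls:
  - hosts:
    - echo1.example.com
    - echo2.example.com
    secretName: echo-tls
  rules:
  - host: echo1.example.com
    http:
      paths:
      - backend:
          serviceName: echo1
          servicePort: 80
  - host: echo2.example.com
    http:
      paths:
      - backend:
          serviceName: echo2
          servicePort: 80
```

The cert-manager.io/cluster-issuer annotation tells cert-manager which ClusterIssuer to use, and the tls block requests a certificate covering both hosts, stored in a Secret named echo-tls. We then save and close the file.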

Now, we push this update to the existing Ingress object using kubectl apply:

```
$ kubectl apply -f echo_ingress.yaml
```

We should see a message confirming that echo-ingress was configured.
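Behind the scenes, the cert-manager.io/cluster-issuer annotation makes cert-manager's ingress-shim component create a Certificate resource named after the TLS secretName; that is the object examined with kubectl describe certificate in the next steps. Written by hand, that Certificate would look roughly like this sketch, which uses cert-manager.io/v1alpha2 to match v0.16.1 and assumes echo-ingress lives in the default namespace:

```yaml
apiVersion: cert-manager.io/v1alpha2
kind: Certificate
metadata:
  name: echo-tls
  namespace: default          # assumed namespace of echo-ingress
spec:
  secretName: echo-tls        # Secret referenced by the Ingress tls block
  dnsNames:                   # hosts listed under tls.hosts in the Ingress
  - echo1.example.com
  - echo2.example.com
  issuerRef:
    name: letsencrypt-staging
    kind: ClusterIssuer       # look up a ClusterIssuer, not a namespaced Issuer
```

With the annotation in place there is no need to apply this manifest yourself; it only shows what cert-manager derives from the Ingress.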

Then we can use kubectl describe to track the state of the Ingress changes we’ve just applied:

```
$ kubectl describe ingress
```

The Events section of the output should show that cert-manager has created the echo-tls certificate. Once the certificate has been created, we can run describe on it to confirm:

```
$ kubectl describe certificate
```

Now, we run the following wget command to send a request to echo1.example.com and print the response headers to STDOUT:

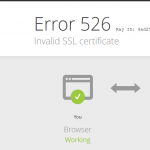

```
$ wget --save-headers -O- echo1.example.com
```

We should see an error showing that HTTPS has been enabled but the certificate cannot be verified, because it is a fake temporary certificate issued by the Let's Encrypt staging server.

After testing with the temporary fake certificate, we can roll out production certificates for the two hosts echo1.example.com and echo2.example.com. For that, we will use the letsencrypt-prod ClusterIssuer.

We update echo_ingress.yaml to use letsencrypt-prod:

```
$ nano echo_ingress.yaml
```

The file should now contain the following:

```yaml
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: echo-ingress
  annotations:
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
  - hosts:
    - echo1.example.com
    - echo2.example.com
    secretName: echo-tls
  rules:
  - host: echo1.example.com
    http:
      paths:
      - backend:
          serviceName: echo1
          servicePort: 80
  - host: echo2.example.com
    http:
      paths:
      - backend:
          serviceName: echo2
          servicePort: 80
```

Here, we update the ClusterIssuer name to letsencrypt-prod.

We then save and close the file.

Roll out the changes using kubectl apply:

```
$ kubectl apply -f echo_ingress.yaml
```

We wait a couple of minutes for the Let's Encrypt production server to issue the certificate.

Then we track the progress using kubectl describe on the certificate object:

```
$ kubectl describe certificate echo-tls
```

As a result, we can see the certificate has been issued successfully.

We'll now perform a test using curl to verify that HTTPS is working correctly:

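```
$ curl echo1.example.com
```

Because TLS is now enabled, Nginx should answer this plain-HTTP request with a redirect to HTTPS (a 308 Permanent Redirect by default) instead of the echo1 response. Then we run curl on https://echo1.example.com: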

```
$ curl https://echo1.example.com
```

This time, we should see the message echo1.

At this point, we've successfully configured HTTPS using a Let's Encrypt certificate for the Nginx Ingress.


Conclusion

This article covers how to set up Nginx ingress on DigitalOcean Kubernetes with cert-manager. Popular Ingress Controllers include Nginx, Contour, HAProxy, and Traefik. Ingresses provide a more efficient and flexible alternative to setting up multiple LoadBalancer services, each of which uses its own dedicated Load Balancer.

Here, you learned how to set up an Nginx Ingress to load balance and route external requests to backend Services inside of your Kubernetes cluster.

You also secured the Ingress by installing the cert-manager certificate provisioner and setting up a Let's Encrypt certificate for two hosts.

Most Ingress Controllers use only one global Load Balancer for all Ingresses, which is more efficient than creating a Load Balancer for every Service you wish to expose.

Helm is a package manager for Kubernetes. Using Helm Charts with your Kubernetes cluster provides configurability and lifecycle management to update, roll back, and delete a Kubernetes application.

After setting up the Ingress, you installed cert-manager in your cluster so that it can automatically provision Let's Encrypt TLS certificates to secure your Ingresses.
