Are you trying to deploy a Django Application with Kubernetes?

This guide is for you.

Kubernetes is a powerful open-source container orchestrator that automates the deployment, scaling, and management of containerized applications, so we will use it to deploy a scalable and secure Django application.

Django is a powerful web framework that can help you get your Python application off the ground quickly.

It includes several convenient features like an object-relational mapper, user authentication, and a customizable administrative interface for your application.

It also includes a caching framework and encourages clean app design through its URL Dispatcher and Template system.

Here at Ibmi Media, as part of our DigitalOcean Management Services, we regularly help our customers with Kubernetes-related queries.

In this context, we shall look into how to deploy a containerized Django polls application into a Kubernetes cluster.

Django Application with Kubernetes

In order to begin, our Support Experts suggest having the following:

1. A Kubernetes 1.15+ cluster with role-based access control (RBAC). This setup will use a DigitalOcean Kubernetes cluster.

2. The kubectl command-line tool, installed on the local machine and configured to connect to the cluster.

3. A registered domain name.

4. An ingress-nginx Ingress Controller and the cert-manager TLS certificate manager, installed and configured in the cluster.

5. A DNS record with your_domain.com pointing to the Ingress Load Balancer’s public IP address.

6. An S3 object storage bucket such as a DigitalOcean Space to store the Django project's static files and a set of Access Keys for this Space.

7. A PostgreSQL server instance, database, and user for the Django app.

8. A DigitalOcean Managed PostgreSQL cluster.

9. A Docker Hub account and public repository.

10. The Docker engine, installed on the local machine.

Steps to deploy a Django Application with Kubernetes

1. Clone and Configure the Application

We can find the application code and Dockerfile in the polls-docker branch of the Django Tutorial Polls App GitHub repository.

The polls-docker branch contains a Dockerized version of this Polls app.

i. Initially, we use git to clone the polls-docker branch of the Django Tutorial Polls App GitHub repository to the local machine:

$ git clone --single-branch --branch polls-docker https://github.com/do-community/django-polls.git

ii. Then we navigate into the django-polls directory:

$ cd django-polls

iii. In the directory, inspect the Dockerfile:

$ cat Dockerfile

iv. The output shows the contents of the Dockerfile:

FROM python:3.7.4-alpine3.10

ADD django-polls/requirements.txt /app/requirements.txt

RUN set -ex \
    && apk add --no-cache --virtual .build-deps postgresql-dev build-base \
    && python -m venv /env \
    && /env/bin/pip install --upgrade pip \
    && /env/bin/pip install --no-cache-dir -r /app/requirements.txt \
    && runDeps="$(scanelf --needed --nobanner --recursive /env \
        | awk '{ gsub(/,/, "\nso:", $2); print "so:" $2 }' \
        | sort -u \
        | xargs -r apk info --installed \
        | sort -u)" \
    && apk add --virtual rundeps $runDeps \
    && apk del .build-deps

ADD django-polls /app
WORKDIR /app

ENV VIRTUAL_ENV /env
ENV PATH /env/bin:$PATH

EXPOSE 8000

CMD ["gunicorn", "--bind", ":8000", "--workers", "3", "mysite.wsgi"]

v. Now, we build the image using docker build:

$ docker build -t polls .

vi. Once done, we list available images using docker images:

$ docker images
REPOSITORY   TAG                IMAGE ID       CREATED        SIZE
polls        latest             80ec4f33aae1   2 weeks ago    197MB
python       3.7.4-alpine3.10   f309434dea3a   8 months ago   98.7MB

vii. Before we run the Django container, we need to configure its running environment using the env file present in the current directory.

Open the env file in any editor:

$ nano django-polls/env

DJANGO_SECRET_KEY=
DEBUG=True
DJANGO_ALLOWED_HOSTS=
DATABASE_ENGINE=postgresql_psycopg2
DATABASE_NAME=polls
DATABASE_USERNAME=
DATABASE_PASSWORD=
DATABASE_HOST=
DATABASE_PORT=
STATIC_ACCESS_KEY_ID=
STATIC_SECRET_KEY=
STATIC_BUCKET_NAME=
STATIC_ENDPOINT_URL=
DJANGO_LOGLEVEL=info

viii. Fill in missing values for the following keys:

- DJANGO_SECRET_KEY: A unique, unpredictable value.
- DJANGO_ALLOWED_HOSTS: Secures the app and prevents HTTP Host header attacks. For testing purposes, set this to *, a wildcard that matches all hosts. In production, set this to your_domain.com.
- DATABASE_USERNAME: The PostgreSQL database user created.
- DATABASE_NAME: Set this to polls or the name of the PostgreSQL database created.
- DATABASE_PASSWORD: The PostgreSQL user password created.
- DATABASE_HOST: The database's hostname.
- DATABASE_PORT: Set this to the database's port.
- STATIC_ACCESS_KEY_ID: The Space or object storage's access key.
- STATIC_SECRET_KEY: Set this to the Space or object storage's access key Secret.
- STATIC_BUCKET_NAME: Set this to the Space name or object storage bucket.
- STATIC_ENDPOINT_URL: The appropriate Spaces or object storage endpoint URL.

ix. Once done, save and close the file.
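As a rough illustration of how an application might consume these keys, the Python sketch below generates a candidate DJANGO_SECRET_KEY and reads the variables into a settings dictionary. The helper names generate_secret_key and load_settings, the default values, and the comma-separated format for DJANGO_ALLOWED_HOSTS are assumptions made for this example; the project's actual settings.py may read them differently.

```python
import os
import secrets


def generate_secret_key(nbytes: int = 50) -> str:
    """Return a random, URL-safe string that could serve as DJANGO_SECRET_KEY."""
    return secrets.token_urlsafe(nbytes)


def load_settings(env=None) -> dict:
    """Build a settings dictionary from environment variables.

    Hypothetical helper: the key names come from the env file above,
    but the defaults and parsing rules are assumptions.
    """
    env = os.environ if env is None else env
    hosts = env.get("DJANGO_ALLOWED_HOSTS", "127.0.0.1")
    return {
        "SECRET_KEY": env.get("DJANGO_SECRET_KEY", ""),
        # Only the literal string "True" switches debug mode on.
        "DEBUG": env.get("DEBUG", "False") == "True",
        # Assumed format: comma-separated host names.
        "ALLOWED_HOSTS": [h.strip() for h in hosts.split(",") if h.strip()],
        "DATABASE": {
            "ENGINE": "django.db.backends." + env.get("DATABASE_ENGINE", "sqlite3"),
            "NAME": env.get("DATABASE_NAME", "polls"),
            "USER": env.get("DATABASE_USERNAME", ""),
            "PASSWORD": env.get("DATABASE_PASSWORD", ""),
            "HOST": env.get("DATABASE_HOST", ""),
            "PORT": env.get("DATABASE_PORT", ""),
        },
    }
```

Passing a plain dictionary instead of os.environ makes the parsing easy to try out without touching the real environment.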

2. Create the Database Schema and Upload Assets to Object Storage

i. We use docker run to override the CMD set in the Dockerfile and create the database schema using the manage.py makemigrations and manage.py migrate commands:

$ docker run --env-file env polls sh -c "python manage.py makemigrations && python manage.py migrate"

ii. We run the polls:latest container image, pass in the environment variable file, and override the Dockerfile command with sh -c "python manage.py makemigrations && python manage.py migrate".

This will create the database schema defined by the app code.

We will have an output similar to:

No changes detected
Operations to perform:
  Apply all migrations: admin, auth, contenttypes, polls, sessions
Running migrations:
  Applying contenttypes.0001_initial... OK
  Applying auth.0001_initial... OK
  Applying admin.0001_initial... OK
  Applying admin.0002_logentry_remove_auto_add... OK
  Applying admin.0003_logentry_add_action_flag_choices... OK
  Applying contenttypes.0002_remove_content_type_name... OK
  Applying auth.0002_alter_permission_name_max_length... OK
  Applying auth.0003_alter_user_email_max_length... OK
  Applying auth.0004_alter_user_username_opts... OK
  Applying auth.0005_alter_user_last_login_null... OK
  Applying auth.0006_require_contenttypes_0002... OK
  Applying auth.0007_alter_validators_add_error_messages... OK
  Applying auth.0008_alter_user_username_max_length... OK
  Applying auth.0009_alter_user_last_name_max_length... OK
  Applying auth.0010_alter_group_name_max_length... OK
  Applying auth.0011_update_proxy_permissions... OK
  Applying polls.0001_initial... OK
  Applying sessions.0001_initial... OK

This indicates that the action was successful.

iii. Then, we will run another instance of the app container and use an interactive shell inside of it to create an administrative user for the Django project.

$ docker run -i -t --env-file env polls sh

iv. This will give a shell prompt inside of the running container to create the Django user:

$ python manage.py createsuperuser

Enter the details for the user, then hit CTRL+D to quit the container and kill it.

v. Finally, we will generate the static files for the app and upload them to the DigitalOcean Space using collectstatic.

$ docker run --env-file env polls sh -c "python manage.py collectstatic --noinput"
121 static files copied.

vi. We can now run the app:

$ docker run --env-file env -p 80:8000 polls
[2019-10-17 21:23:36 +0000] [1] [INFO] Starting gunicorn 19.9.0
[2019-10-17 21:23:36 +0000] [1] [INFO] Listening at: http://0.0.0.0:8000 (1)
[2019-10-17 21:23:36 +0000] [1] [INFO] Using worker: sync
[2019-10-17 21:23:36 +0000] [7] [INFO] Booting worker with pid: 7
[2019-10-17 21:23:36 +0000] [8] [INFO] Booting worker with pid: 8
[2019-10-17 21:23:36 +0000] [9] [INFO] Booting worker with pid: 9

Here, we run the default command defined in the Dockerfile and expose container port 8000 so that port 80 on the local machine gets mapped to port 8000 of the polls container.

We will be able to navigate to the polls app using a web browser.

Type http://localhost in the URL bar. Since there is no route defined for the / path, you will likely receive a 404 Page Not Found error.

vii. Then navigate to http://localhost/polls to see the Polls app interface.

To view the administrative interface, visit http://localhost/admin.

Enter the administrative username and password and we can access the Polls app’s administrative interface.

viii. Then, hit CTRL+C in the terminal window running the Docker container to kill the container.

3. Push the Django App Image to Docker Hub

Here, we will push the Django image to the public Docker Hub repository created.

We can also push the image to a private repository.

i. To begin, log in to Docker Hub on the local machine:

$ docker login
Login with your Docker ID to push and pull images from Docker Hub.

If we do not have a Docker ID yet, we can create one at https://hub.docker.com.

The Django image currently has the polls:latest tag.

ii. To push it to the Docker Hub repo, re-tag the image with the Docker Hub username and repo name:

$ docker tag polls:latest your_dockerhub_username/your_dockerhub_repo_name:latest

iii. Then push the image to the repo:

$ docker push your_dockerhub_username/your_dockerhub_repo_name:latest

Now that the image is available to Kubernetes on Docker Hub, we can begin rolling it out in the cluster.

4. Set Up the ConfigMap

In Kubernetes, we can inject configuration variables using ConfigMaps and Secrets.

i. Initially, we create a directory called yaml to store our Kubernetes manifests. Navigate into the directory.

$ mkdir yaml
$ cd yaml

ii. In any text editor, open the file polls-configmap.yaml:

$ nano polls-configmap.yaml

iii. Then paste the following ConfigMap manifest:

apiVersion: v1
kind: ConfigMap
metadata:
  name: polls-config
data:
  DJANGO_ALLOWED_HOSTS: "*"
  STATIC_ENDPOINT_URL: "https://your_space_name.space_region.digitaloceanspaces.com"
  STATIC_BUCKET_NAME: "your_space_name"
  DJANGO_LOGLEVEL: "info"
  DEBUG: "True"
  DATABASE_ENGINE: "postgresql_psycopg2"

We have extracted the non-sensitive configuration from the env file and pasted it into a ConfigMap manifest.

iv. Copy in the same values entered into the env file in the previous step.

For test purposes, leave DJANGO_ALLOWED_HOSTS as * to disable Host header-based filtering. In a production environment, we set this to the app’s domain.
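To see why the * wildcard switches off Host header filtering, here is a simplified Python sketch of host matching. It follows the rules Django documents for ALLOWED_HOSTS (a * entry matches everything, a leading dot matches a domain and its subdomains, anything else must match exactly), but host_allowed is a hypothetical helper written for this example and not Django's own implementation.

```python
def host_allowed(host: str, allowed_hosts: list) -> bool:
    """Return True if the request host matches any allowed pattern."""
    host = host.lower()
    for pattern in allowed_hosts:
        pattern = pattern.lower()
        if pattern == "*":
            # Wildcard: every Host header is accepted.
            return True
        if pattern.startswith("."):
            # ".example.com" matches example.com and all of its subdomains.
            if host == pattern[1:] or host.endswith(pattern):
                return True
        elif host == pattern:
            return True
    return False
```

With ["*"] every host passes, which is convenient for testing; with ["your_domain.com"] only requests for that exact host are accepted.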

v. Once done, save and close it.

vi. Then we create the ConfigMap in the cluster using kubectl apply:

$ kubectl apply -f polls-configmap.yaml
configmap/polls-config created

5. Set Up the Secret

Secret values must be base64-encoded. We can repeat the process from the previous step, manually base64-encoding Secret values and pasting them into a manifest file.

Alternatively, we can create them from an environment variable file using kubectl create with the --from-env-file flag, which is the approach we take in this step.

We will once again use the env file, removing variables inserted into the ConfigMap.
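The split between ConfigMap keys and Secret keys can also be sketched in Python. The key set below mirrors the ConfigMap manifest from the previous step; parse_env and split_env are hypothetical helpers for illustration, and the parser only handles simple KEY=VALUE lines.

```python
# Keys that were placed in the polls-config ConfigMap in the previous step.
CONFIGMAP_KEYS = {
    "DJANGO_ALLOWED_HOSTS",
    "STATIC_ENDPOINT_URL",
    "STATIC_BUCKET_NAME",
    "DJANGO_LOGLEVEL",
    "DEBUG",
    "DATABASE_ENGINE",
}


def parse_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    pairs = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        pairs[key.strip()] = value.strip()
    return pairs


def split_env(pairs: dict):
    """Return (configmap_pairs, secret_pairs) based on CONFIGMAP_KEYS."""
    config = {k: v for k, v in pairs.items() if k in CONFIGMAP_KEYS}
    secret = {k: v for k, v in pairs.items() if k not in CONFIGMAP_KEYS}
    return config, secret


def to_env_text(pairs: dict) -> str:
    """Serialize pairs back into KEY=VALUE lines."""
    return "\n".join(f"{k}={v}" for k, v in pairs.items()) + "\n"
```

Writing to_env_text(secret) to a file would give content in the same shape as the polls-secrets file edited by hand in this step.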

i. Make a copy of the env file called polls-secrets in the yaml directory:

$ cp ../env ./polls-secrets

ii. In any text editor, edit the file:

$ nano polls-secrets

DJANGO_SECRET_KEY=
DEBUG=True
DJANGO_ALLOWED_HOSTS=
DATABASE_ENGINE=postgresql_psycopg2
DATABASE_NAME=polls
DATABASE_USERNAME=
DATABASE_PASSWORD=
DATABASE_HOST=
DATABASE_PORT=
STATIC_ACCESS_KEY_ID=
STATIC_SECRET_KEY=
STATIC_BUCKET_NAME=
STATIC_ENDPOINT_URL=
DJANGO_LOGLEVEL=info

iii. Delete all the variables inserted into the ConfigMap manifest. The file should then look like this:

DJANGO_SECRET_KEY=your_secret_key
DATABASE_NAME=polls
DATABASE_USERNAME=your_django_db_user
DATABASE_PASSWORD=your_django_db_user_password
DATABASE_HOST=your_db_host
DATABASE_PORT=your_db_port
STATIC_ACCESS_KEY_ID=your_space_access_key
STATIC_SECRET_KEY=your_space_access_key_secret

iv. Make sure to use the same values used earlier. Once done, save and close the file.

v. Create the Secret in the cluster using kubectl create secret:

$ kubectl create secret generic polls-secret --from-env-file=polls-secrets
secret/polls-secret created

vi. We can inspect the Secret using kubectl describe:

$ kubectl describe secret polls-secret
Name:         polls-secret
Namespace:    default
Labels:       <none>
Annotations:  <none>

Type:  Opaque

Data
====
DATABASE_PASSWORD:     8 bytes
DATABASE_PORT:         5 bytes
DATABASE_USERNAME:     5 bytes
DJANGO_SECRET_KEY:     14 bytes
STATIC_ACCESS_KEY_ID:  20 bytes
STATIC_SECRET_KEY:     43 bytes
DATABASE_HOST:         47 bytes
DATABASE_NAME:         5 bytes

At this point, we have stored the app's configuration in the Kubernetes cluster using the Secret and ConfigMap object types.
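For reference, the base64 encoding that kubectl applied for us can be reproduced with Python's standard library. This is only a sketch of the encoding step: the helper names are made up for this example, and base64 is an encoding, not encryption. The byte counts that kubectl describe reports refer to the decoded values, which is why DATABASE_NAME shows 5 bytes for the string polls.

```python
import base64


def encode_secret_value(value: str) -> str:
    """Encode a plain-text value the way it appears in a Secret's data field."""
    return base64.b64encode(value.encode("utf-8")).decode("ascii")


def decode_secret_value(encoded: str) -> str:
    """Decode a base64 string taken from a Secret's data field."""
    return base64.b64decode(encoded).decode("utf-8")


def decoded_size(encoded: str) -> int:
    """Return the size in bytes of the decoded value."""
    return len(base64.b64decode(encoded))


encoded_name = encode_secret_value("polls")
```

Here encoded_name holds the form that would be pasted into a hand-written Secret manifest, and decoded_size(encoded_name) gives the same 5 bytes shown for DATABASE_NAME.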

6. Roll Out the Django App Using a Deployment

Moving ahead, we will create a Deployment for our Django app.

Deployments control one or more Pods. Pods enclose one or more containers.

i. In any editor, open the file, polls-deployment.yaml:

$ nano polls-deployment.yaml

ii. Paste in the following Deployment manifest:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: polls-app
  labels:
    app: polls
spec:
  replicas: 2
  selector:
    matchLabels:
      app: polls
  template:
    metadata:
      labels:
        app: polls
    spec:
      containers:
        - image: your_dockerhub_username/app_repo_name:latest
          name: polls
          envFrom:
            - secretRef:
                name: polls-secret
            - configMapRef:
                name: polls-config
          ports:
            - containerPort: 8000
              name: gunicorn

Fill in the appropriate container image name, referring to the Django Polls image we pushed to Docker Hub.

Here we define a Kubernetes Deployment called polls-app and label it with the key-value pair app: polls. We specify that we would like to run two replicas of the Pod defined below the template field.

Using envFrom with secretRef and configMapRef, we specify that all the data from the polls-secret Secret and the polls-config ConfigMap should be injected into the containers as environment variables.

iii. Finally, we expose containerPort 8000 and name it gunicorn.
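The effect of envFrom can be pictured as merging the key-value pairs of each source, in order, into one environment. The Python sketch below is a simplified model and not how the kubelet is implemented; resolve_env_from and the sample values are hypothetical. It does reflect one documented rule: when the same key exists in several envFrom sources, the value from the last source takes precedence.

```python
def resolve_env_from(sources: list) -> dict:
    """Merge envFrom sources in order; later sources override earlier ones."""
    env = {}
    for source in sources:
        env.update(source)
    return env


# Sample data standing in for the polls-secret Secret and polls-config ConfigMap.
polls_secret = {"DATABASE_NAME": "polls", "DATABASE_PORT": "25060"}
polls_config = {"DEBUG": "True", "DJANGO_LOGLEVEL": "info"}

# Same order as in the Deployment manifest: secretRef first, then configMapRef.
container_env = resolve_env_from([polls_secret, polls_config])
```

Because the two objects were built from disjoint sets of keys, nothing is overridden here; the ordering only matters if a key were defined in both.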

iv. Once done, save and close the file.

v. Then we create the Deployment in the cluster using kubectl apply -f:

$ kubectl apply -f polls-deployment.yaml
deployment.apps/polls-app created

vi. Check if the Deployment rolled out correctly using kubectl get:

$ kubectl get deploy polls-app
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
polls-app   2/2     2            2           6m38s

vii. If we encounter an error, we can use kubectl describe to inspect the failure:

$ kubectl describe deploy

viii. We can inspect the two Pods using kubectl get pod:

$ kubectl get pod
NAME                         READY   STATUS    RESTARTS   AGE
polls-app-847f8ccbf4-2stf7   1/1     Running   0          6m42s
polls-app-847f8ccbf4-tqpwm   1/1     Running   0          6m57s

7. Allow External Access using a Service

Here, we will create a Service for the Django app.

For now, to test that everything is functioning correctly, we will create a temporary NodePort Service to access the Django app.

i. Initially, create the file, polls-svc.yaml using any editor:

$ nano polls-svc.yaml

ii. Paste in the following Service manifest:

apiVersion: v1
kind: Service
metadata:
  name: polls
  labels:
    app: polls
spec:
  type: NodePort
  selector:
    app: polls
  ports:
    - port: 8000
      targetPort: 8000

iii. Once done, save and close it.

iv. Roll out the Service using kubectl apply:

$ kubectl apply -f polls-svc.yaml
service/polls created

v. To confirm the Service was created, we use kubectl get svc:

$ kubectl get svc polls
NAME    TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
polls   NodePort   10.245.197.189   <none>        8000:32654/TCP   59s

This output shows the Service's cluster-internal IP and NodePort (32654).

vi. To connect to the service, we need the external IP addresses for our cluster nodes:

$ kubectl get node -o wide
NAME                   STATUS   ROLES    AGE   VERSION   INTERNAL-IP   EXTERNAL-IP   OS-IMAGE                       KERNEL-VERSION          CONTAINER-RUNTIME
pool-7no0qd9e0-364fd   Ready    <none>   27h   v1.18.8   10.118.0.5    203.0.113.1   Debian GNU/Linux 10 (buster)   4.19.0-10-cloud-amd64   docker://18.9.9
pool-7no0qd9e0-364fi   Ready    <none>   27h   v1.18.8   10.118.0.4    203.0.113.2   Debian GNU/Linux 10 (buster)   4.19.0-10-cloud-amd64   docker://18.9.9
pool-7no0qd9e0-364fv   Ready    <none>   27h   v1.18.8   10.118.0.3    203.0.113.3   Debian GNU/Linux 10 (buster)   4.19.0-10-cloud-amd64   docker://18.9.9

vii. In a web browser, visit the Polls app using any Node's external IP address and the NodePort. Given the output above, the app's URL would be http://203.0.113.1:32654/polls.

We should see the same Polls app interface that we accessed locally.

We can repeat the same test using the /admin route: http://203.0.113.1:32654/admin.
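The way the test URL is assembled from the two command outputs can be sketched in Python. The helpers parse_node_port and nodeport_url are hypothetical names for this example; the range check reflects the Kubernetes default NodePort range of 30000 to 32767, which a cluster administrator may have changed.

```python
# Default NodePort range in Kubernetes (configurable per cluster).
NODEPORT_RANGE = range(30000, 32768)


def parse_node_port(ports_column: str) -> int:
    """Extract the NodePort from a PORT(S) value such as '8000:32654/TCP'."""
    mapping = ports_column.split("/")[0]   # '8000:32654'
    return int(mapping.split(":")[1])      # 32654


def nodeport_url(node_ip: str, node_port: int, path: str = "/") -> str:
    """Build the plain-HTTP URL for a NodePort Service on a given node."""
    if node_port not in NODEPORT_RANGE:
        raise ValueError(f"{node_port} is outside the default NodePort range")
    if not path.startswith("/"):
        path = "/" + path
    return f"http://{node_ip}:{node_port}{path}"


port = parse_node_port("8000:32654/TCP")
polls_url = nodeport_url("203.0.113.1", port, "/polls")
admin_url = nodeport_url("203.0.113.1", port, "/admin")
```

Any of the three node external IPs could be used in place of 203.0.113.1, since a NodePort Service listens on the same port on every node.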

8. Configure HTTPS Using Nginx Ingress and cert-manager

The final step is to secure external traffic to the app using HTTPS.

For that, we will use the ingress-nginx Ingress Controller and create an Ingress object to route external traffic to the polls Kubernetes Service.

i. Before continuing with this step, we should delete the echo-ingress Ingress if it still exists from setting up the Ingress Controller:

$ kubectl delete ingress echo-ingress

We can also delete the dummy Services and Deployments using kubectl delete svc and kubectl delete deploy.

We should also create a DNS A record with your_domain.com pointing to the Ingress Load Balancer’s public IP address.

Once we have an A record pointing to the Ingress Controller Load Balancer, we can create an Ingress for your_domain.com and the polls Service.

ii. In any editor, open the file, polls-ingress.yaml:

$ nano polls-ingress.yaml

iii. Paste in the following Ingress manifest:

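apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: polls-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    cert-manager.io/cluster-issuer: "letsencrypt-staging"
spec:
  tls:
  - hosts:
    - your_domain.com
    secretName: polls-tls
  rules:
  - host: your_domain.com
    http:
      paths:
      - backend:
          serviceName: polls
          servicePort: 8000

Note that the networking.k8s.io/v1beta1 Ingress API used here was removed in Kubernetes 1.22; on newer clusters the manifest has to be ported to networking.k8s.io/v1, which uses a different backend syntax.

iv. Once done, save and close it.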

v. Create the Ingress in the cluster using kubectl apply:

$ kubectl apply -f polls-ingress.yaml
ingress.networking.k8s.io/polls-ingress created

vi. We can use kubectl describe to track the state of the Ingress:

$ kubectl describe ingress polls-ingress
Name:             polls-ingress
Namespace:        default
Address:          workaround.your_domain.com
Default backend:  default-http-backend:80 (<error: endpoints "default-http-backend" not found>)
TLS:
  polls-tls terminates your_domain.com
Rules:
  Host             Path  Backends
  ----             ----  --------
  your_domain.com
                         polls:8000 (10.244.0.207:8000,10.244.0.53:8000)
Annotations:       cert-manager.io/cluster-issuer: letsencrypt-staging
                   kubernetes.io/ingress.class: nginx
Events:
  Type    Reason             Age  From                      Message
  ----    ------             ---- ----                      -------
  Normal  CREATE             51s  nginx-ingress-controller  Ingress default/polls-ingress
  Normal  CreateCertificate  51s  cert-manager              Successfully created Certificate "polls-tls"
  Normal  UPDATE             25s  nginx-ingress-controller  Ingress default/polls-ingress

vii. We can run describe on the polls-tls Certificate to confirm its successful creation:

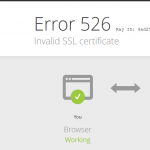

$ kubectl describe certificate polls-tls
. . .
Events:
  Type    Reason     Age    From          Message
  ----    ------     ----   ----          -------
  Normal  Issuing    3m33s  cert-manager  Issuing certificate as Secret does not exist
  Normal  Generated  3m32s  cert-manager  Stored new private key in temporary Secret resource "polls-tls-v9lv9"
  Normal  Requested  3m32s  cert-manager  Created new CertificateRequest resource "polls-tls-drx9c"
  Normal  Issuing    2m58s  cert-manager  The certificate has been successfully issued

Given that we used the staging ClusterIssuer, navigating to your_domain.com will bring us to an error page.

viii. To send a test request, we will use wget from the command-line.

$ wget -O - http://your_domain.com/polls
. . .
ERROR: cannot verify your_domain.com's certificate, issued by 'CN=Fake LE Intermediate X1':
  Unable to locally verify the issuer's authority.
To connect to your_domain.com insecurely, use `--no-check-certificate'.

ix. As the output suggests, we can use the --no-check-certificate flag to bypass certificate validation:

$ wget --no-check-certificate -q -O - http://your_domain.com/polls

<link rel="stylesheet" type="text/css" href="https://your_space.nyc3.digitaloceanspaces.com/django-polls/static/polls/style.css">

<p>No polls are available.</p>

Now we can modify the Ingress to use the production ClusterIssuer.

x. Once again open polls-ingress.yaml for editing:

$ nano polls-ingress.yaml

xi. Modify the cluster-issuer annotation:

apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  name: polls-ingress
  annotations:
    kubernetes.io/ingress.class: "nginx"
    cert-manager.io/cluster-issuer: "letsencrypt-prod"
spec:
  tls:
  - hosts:
    - your_domain.com
    secretName: polls-tls
  rules:
  - host: your_domain.com
    http:
      paths:
      - backend:
          serviceName: polls
          servicePort: 8000

xii. Once done, save and close the file. Update the Ingress using kubectl apply:

$ kubectl apply -f polls-ingress.yaml
ingress.networking.k8s.io/polls-ingress configured

xiii. We can use kubectl describe certificate polls-tls and kubectl describe ingress polls-ingress to track the certificate issuance status:

$ kubectl describe ingress polls-ingress
. . .
Events:
  Type    Reason             Age                From                      Message
  ----    ------             ----               ----                      -------
  Normal  CREATE             23m                nginx-ingress-controller  Ingress default/polls-ingress
  Normal  CreateCertificate  23m                cert-manager              Successfully created Certificate "polls-tls"
  Normal  UPDATE             76s (x2 over 22m)  nginx-ingress-controller  Ingress default/polls-ingress
  Normal  UpdateCertificate  76s                cert-manager              Successfully updated Certificate "polls-tls"

xiv. Navigate to your_domain.com/polls in the web browser. We should see the Polls app interface, and the browser should indicate that HTTPS encryption is active.
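The same check can be made outside the browser. The Python sketch below builds a TLS context with full certificate and hostname verification, which is what a browser enforces, and uses it for a request. The function names strict_tls_context and fetch_status are hypothetical, and the sketch only defines the helpers, so nothing is contacted until fetch_status is called.

```python
import ssl
import urllib.request


def strict_tls_context() -> ssl.SSLContext:
    """Return a context that verifies the certificate chain and the hostname."""
    return ssl.create_default_context()


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Request the URL over verified TLS and return the HTTP status code."""
    with urllib.request.urlopen(
        url, timeout=timeout, context=strict_tls_context()
    ) as response:
        return response.status
```

Calling fetch_status("https://your_domain.com/polls") should succeed once the production certificate is in place, whereas with the staging certificate the request would typically fail with a certificate verification error.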

As a final cleanup task, we can optionally switch the polls Service type from NodePort to the internal-only ClusterIP type.

xv. Modify polls-svc.yaml using any editor:

$ nano polls-svc.yaml

Change the type from NodePort to ClusterIP:

apiVersion: v1
kind: Service
metadata:
  name: polls
  labels:
    app: polls
spec:
  type: ClusterIP
  selector:
    app: polls
  ports:
    - port: 8000
      targetPort: 8000

xvi. Once done, save and close it.

xvii. Then roll out the changes using kubectl apply:

$ kubectl apply -f polls-svc.yaml --force
service/polls configured

xviii. Confirm that the Service was modified using kubectl get svc:

$ kubectl get svc polls
NAME    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)    AGE
polls   ClusterIP   10.245.203.186   <none>        8000/TCP   22s

This output shows that the Service type is now ClusterIP.

The only way to access it is via our domain and the Ingress created.


Conclusion

This article covers deploying a scalable and secure Django application on Kubernetes, a powerful open-source container orchestrator that automates the deployment, scaling, and management of containerized applications.

Kubernetes objects like ConfigMaps and Secrets allow you to centralize and decouple configuration from your containers, while controllers like Deployments automatically restart failed containers and enable quick scaling of container replicas.

TLS encryption is enabled with an Ingress object and the ingress-nginx open-source Ingress Controller.

The cert-manager Kubernetes add-on issues and renews certificates using the free Let's Encrypt certificate authority.
